CHINGMU in the Spotlight at the Zhongguancun Embodied AI Robot Competition, Boosting Innovation with Motion Capture

On November 17-18, 2025, the finals of the Zhongguancun Embodied AI Robot Application Competition were successfully held in the Beijing Zhongguancun National Independent Innovation Demonstration Zone. As the technical support unit for the competition, CHINGMU made an appearance at the event, showcasing a full-process robot development solution based on high-performance optical motion capture.

Empowering the Entire Competition Cycle with Motion Capture Technology

The competition focused on the practical application of embodied AI, featuring cutting-edge fields such as humanoid robots, robot dogs, robotic arms, and dexterous hands. It was structured around three main tracks: a Model Capability Challenge, a Scenario Application Contest, and Academic Frontiers & Industrial Ecosystem, encompassing 19 core tasks across various typical scenarios including home service, commercial service, industrial manufacturing, and safety disposal. The aim was to promote the development, implementation, and industrial integration of embodied AI technologies.

As a global leader in motion capture, CHINGMU draws on its expertise in embodied AI to provide comprehensive technical support and solutions for competitions of this kind. It helps participating teams, judging systems, industry experts, and other stakeholders establish a closed-loop "Training-Evaluation-Optimization" technical path, offering reliable support for both the competition and industrial applications.

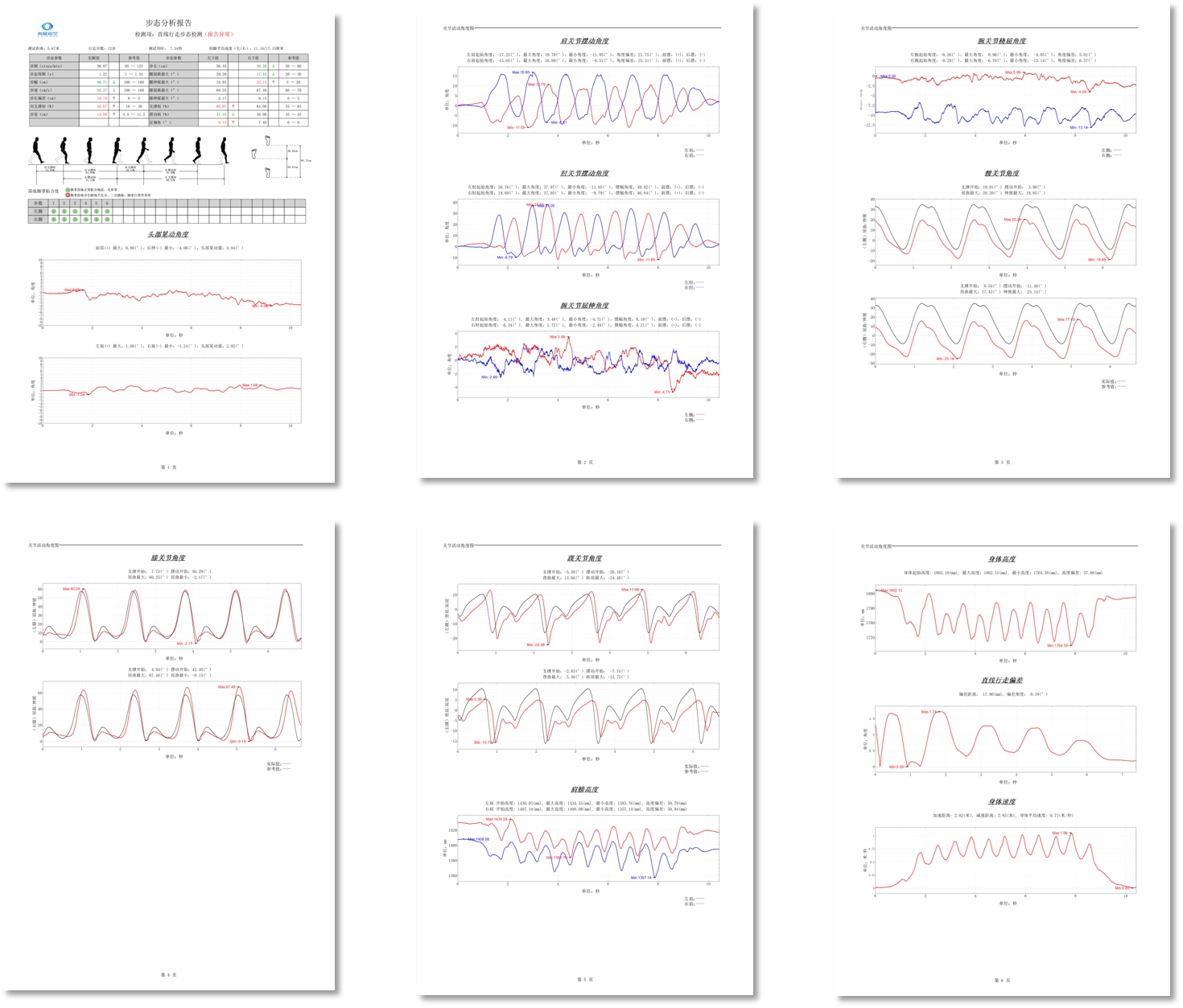

Pre-Competition: Data-Driven Robot Training and Algorithm Tuning

In the robot R&D phase, acquiring high-quality, high-precision training data is crucial for algorithm training and model optimization. Based on its self-developed optical motion capture system, CHINGMU has built a data factory solution covering the entire process of venue design, equipment deployment, data acquisition, and data cleaning. This facilitates the efficient construction of high-quality datasets and provides comprehensive trajectory information for robot motion learning.

The system captures the motion trajectories of a robot's full body or specific parts in real time with sub-millimeter accuracy and supports multi-modal data fusion. Combined with open protocols and multi-platform SDKs, it allows motion data to be integrated directly with simulation platforms. Typical applications include:

Dexterous hand fine manipulation learning;

Humanoid robot gait and dynamic balance training;

Bionic motion modeling and joint control optimization.
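As a rough illustration of the data cleaning step mentioned above, the sketch below fills short dropouts in a single marker trajectory by linear interpolation. It is a generic technique, not a description of CHINGMU's pipeline: the function name `fill_gaps`, the `max_gap` parameter, and the frame layout (one `(x, y, z)` tuple per frame, `None` where the marker was lost) are all assumptions made for this example.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, ...]  # one (x, y, z) marker position per frame


def fill_gaps(frames: List[Optional[Point]], max_gap: int = 5) -> List[Optional[Point]]:
    """Linearly interpolate interior runs of missing frames (None).

    Runs longer than max_gap, and runs touching either end of the
    recording, are left as None because there is nothing reliable to
    interpolate between. (Hypothetical helper for illustration only.)
    """
    out = list(frames)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        start = i
        while i < n and out[i] is None:
            i += 1
        end = i  # index of the first valid frame after the gap, or n
        gap = end - start
        if start == 0 or end == n or gap > max_gap:
            continue
        before, after = out[start - 1], out[end]
        for k in range(start, end):
            t = (k - start + 1) / (gap + 1)  # fraction of the way across the gap
            out[k] = tuple(a + t * (b - a) for a, b in zip(before, after))
    return out
```

A low `max_gap` keeps the interpolation conservative: long occlusions stay marked as missing so they can be re-captured or handled separately instead of being silently invented.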

During Competition: High-Precision Real-time Capture for Objective Robot Performance Evaluation

During the competition evaluation phase, the CHINGMU optical motion capture system provides professional-grade performance assessment support. Through high-precision positioning and millisecond-level real-time feedback, it establishes a comprehensive quantitative evaluation system, offering objective and accurate data support for judges' decisions.

The system supports multi-target synchronous tracking and multi-modal data acquisition. Combined with customizable evaluation metrics and automated reporting functions, it accurately captures the posture stability, trajectory accuracy, and collaborative performance of robots in dynamic tasks. It is suitable for performance evaluation in the following typical scenarios:

Humanoid Robots: Gait stability, obstacle-crossing capability, grasping accuracy.

Robot Dogs: Terrain adaptability, load stability, anti-interference capability.

Dexterous Hands: Grasping success rate, force control, multi-finger coordination.

Robotic Arms: Trajectory accuracy, positioning repeatability, end-effector tremor.

Multi-Robot Collaboration: Formation keeping, task allocation, collaborative obstacle avoidance.
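To make two of the metrics above concrete, the following sketch computes a trajectory error and a positioning repeatability figure from captured 3D positions. It is a minimal example under stated assumptions, not CHINGMU's evaluation software: the function names are hypothetical, the trajectories are assumed to be already time-aligned and expressed in the same coordinate frame, and repeatability is taken as the mean distance to the centroid plus three standard deviations, a spread measure commonly used for robot arms.

```python
import math
import statistics
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def trajectory_rmse(measured: Sequence[Point], reference: Sequence[Point]) -> float:
    """Root-mean-square 3D position error between two aligned trajectories."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [
        sum((m - r) ** 2 for m, r in zip(p, q))
        for p, q in zip(measured, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


def positioning_repeatability(points: Sequence[Point]) -> float:
    """Mean distance to the centroid plus three sample standard deviations.

    `points` holds the end-effector position measured on repeated visits
    to the same commanded target (at least two visits are required).
    """
    if len(points) < 2:
        raise ValueError("need at least two repeated measurements")
    n = len(points)
    centroid = tuple(sum(p[i] for p in points) / n for i in range(3))
    distances = [math.dist(p, centroid) for p in points]
    return statistics.mean(distances) + 3 * statistics.stdev(distances)
```

Both functions return values in the same length unit as the input positions, so millimeter inputs give millimeter results.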

Post-Competition: In-Depth Data Review Drives Continuous Robot System Optimization

After the competition, the CHINGMU motion capture system continues to deliver value from the captured data to participating teams and organizers. It supports in-depth data analysis and algorithm iteration, helping teams improve robot performance in a closed loop and build up technical know-how:

Motion Trajectory Review: Replay key action segments and identify control bottlenecks and anomalies through data playback and multi-format export.

Algorithm Iteration Verification: Validate the effectiveness and robustness of optimized algorithms based on real-scenario data.

Performance Report Generation: Automatically generate standardized evaluation reports to provide a basis for structural improvements and strategy tuning, facilitating scientific publications and product optimization.

CHINGMU currently collaborates closely with companies and institutions such as Honor, Unitree Robotics, Astribot, Humanoid Robot (Shanghai) Co., Ltd., and Zhejiang Humanoid Robot Innovation Center, as well as with numerous universities including Tsinghua University, Zhejiang University, and Soochow University. Together, they conduct cutting-edge research on high-precision finger motion training, dexterous hand control, and teleoperation interaction, continuously expanding the application boundaries of optical motion capture technology in embodied AI.

As the humanoid robot industry develops rapidly, CHINGMU will continue to advance the technological evolution and practical application of motion capture systems. It is committed to building high-precision motion perception and evaluation capabilities in fields such as industrial automation, home services, medical rehabilitation, and emergency rescue, providing reliable technical support and data assurance for embodied AI competitions and R&D projects.