CHINGMU: The Code Behind the Elegant Dance Moves of Unitree and Zhiyuan Humanoids

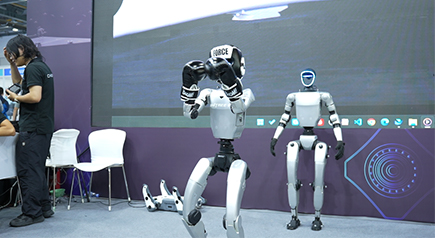

When Unitree’s latest H2 humanoid robot dances to the beat, and when Zhiyuan Robotics' G2 dexterous hand steadily draws a bow and shoots an arrow, behind these seemingly fluid "human-like movements" lies a core support that is easily overlooked—motion capture technology.

At the exhibition of IROS 2025 (the IEEE/RSJ International Conference on Intelligent Robots and Systems), the optical-inertial hybrid motion capture equipment shown by CHINGMU became a focal point. Using only 2-3 cameras, it can accurately capture the subtle movements of finger joints and operate stably in environments with strong reflections from glass and metal. This addresses two industry pain points of traditional motion capture: "data loss due to occlusion" and "inertial drift."

Zhang Haiwei, CEO of CHINGMU, a leading enterprise in the domestic motion capture field, said in a recent exclusive interview with "Robotics Lecture Hall" that the value of motion capture technology for humanoid robots goes far beyond recording movements. It is the coach that teaches robots to walk and work, the examiner that tests robot performance, and a key piece of infrastructure for solving the industry's data scarcity and promoting robot deployment.

From the movement optimization of Unitree's humanoid robots to the development of Zhiyuan's dexterous hands, from lab performance evaluation to factory skill assessment, motion capture is becoming an invisible force pushing humanoid robots from "lab prototypes" to "industrial products."

▍The Three-Step Evolution of Humanoid Robots

"A humanoid robot fresh off the production line is like a newborn child—it needs to be taught and examined." Zhang Haiwei used a vivid metaphor to highlight the core value of motion capture technology.

In the humanoid robot industry, the role of motion capture focuses on two main areas: "Training" and "Evaluation." These two areas are further subdivided into three levels: "Locomotion Intelligence," "Task Intelligence," and "Interaction Intelligence," progressively pushing robots from "being able to move" to "moving well."

The "coach" role of motion capture is first reflected in helping robots establish Locomotion Intelligence, the most fundamental survival skill. This is the training side, which enables robots to learn to act like humans. For example, the dance moves of Unitree's H2 and the jumping postures of other robots are essentially achieved by recording human major-joint movement data (such as changes in hip and knee joint angles) with motion capture equipment and then "replicating" this data onto the robots.

Zhang Haiwei explained that the core of Locomotion Intelligence is to enable robots to master balance and coordination. Data on how the center of gravity shifts during walking or how joints coordinate during turning all need to be collected through high-precision motion capture. More important than being able to run and jump is the robot's Task Intelligence—the ability to "get work done." This is also the core requirement behind companies like Zhiyuan and Ubtech developing dexterous hands.

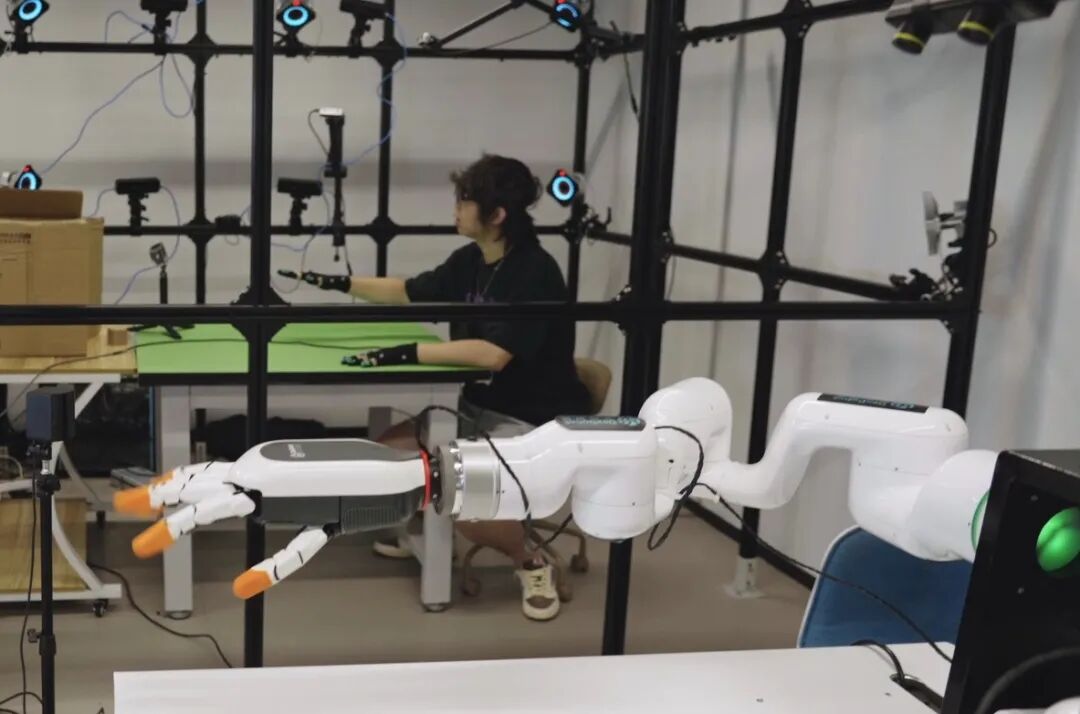

It is reported that CHINGMU launched optical finger motion capture equipment as early as last year, capable of capturing subtle human finger movements like flexion, extension, and grasping. Most domestic dexterous hand companies, such as Lingqiao Intelligence, are using this set of equipment to train their robots.

"For example, when grabbing a cup, human fingers adjust the force based on the cup's shape. The bending angle error of the fingertip joints cannot exceed 1 degree. Traditional motion capture simply couldn't achieve such precision," Zhang Haiwei illustrated. "But CHINGMU's finger motion capture uses actively illuminated encoded Markers to distinguish the joint positions of each finger, and can even capture data on force changes like 'pinching a piece of paper.'"

In Zhang Haiwei's view, the longer-term goal for humanoid robots is Interaction Intelligence—enabling robots to interact freely with people and the environment. This scope is broader than Task Intelligence: not only interacting with objects, like tightening screws or opening doors, but also interacting with people, like avoiding a person's arm when handing over an object, and even collaboration between robots.

"This is true Embodied Intelligence," Zhang Haiwei emphasized. "For instance, two robots cooperating to assemble a part in a factory—one hands the tool, the other tightens the screw. Their action coordination requires precise spatiotemporal synchronization. This requires motion capture equipment to record the action logic of human collaboration and then translate it into interaction data for the robots." This is the foundation for humanoid robot deployment.

▍From "Coach" to "Examiner"

If training is about teaching skills, then evaluation is about "qualifying."

During the R&D phase of a humanoid robot, motion capture (mocap) serves as a performance optimization tool. For example, CHINGMU's optical motion capture system can record the movement trajectories of a humanoid robot's hip and ankle joints. Comparing this data with normal human walking patterns helps identify the root cause of abnormal motions in the robot, whether it is an angle error in the knee joint or mistimed weight transfer during gait.
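The comparison against a human reference can be sketched in a few lines. This is a minimal illustration, not CHINGMU's tooling: it assumes joint angles (in degrees) have already been extracted and resampled to the same time steps, and the function names and sample values are hypothetical.

```python
import math


def joint_rmse(robot, reference):
    """Root-mean-square error between two equal-length angle series (degrees)."""
    if len(robot) != len(reference) or not robot:
        raise ValueError("series must be non-empty and the same length")
    squared = sum((r - h) ** 2 for r, h in zip(robot, reference))
    return math.sqrt(squared / len(robot))


def worst_joint(robot_angles, human_angles):
    """Return (joint_name, rmse) for the joint that deviates most from the reference."""
    errors = {
        name: joint_rmse(robot_angles[name], human_angles[name])
        for name in human_angles
    }
    name = max(errors, key=errors.get)
    return name, errors[name]


# Made-up samples over one gait cycle, for illustration only.
human = {"hip": [10.0, 20.0, 10.0, 0.0], "knee": [5.0, 40.0, 60.0, 10.0]}
robot = {"hip": [11.0, 19.0, 10.0, 1.0], "knee": [5.0, 34.0, 52.0, 10.0]}

name, err = worst_joint(robot, human)
print(f"largest deviation: {name} ({err:.2f} deg RMSE)")
```

Ranking joints by error in this way points tuning effort at the joint that departs most from the human pattern; in the made-up data above that is the knee.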

"Just like giving a robot a motion CT," said Zhang Haiwei, "traditional tuning relies on manual observation, which is prone to errors and inefficient. In contrast, motion capture can control motion accuracy at the sub-millimeter level, improving tuning efficiency by more than 10 times."

At the production stage, motion capture becomes the "quality inspector." The logic resembles the evaluation of robot vacuums: vacuums are tested for obstacle avoidance accuracy and path planning error, while humanoid robots are tested on indicators such as walking stability and repeat positioning accuracy.
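Repeat positioning accuracy, one of the indicators named above, is straightforward to quantify once positions have been captured. The following is a minimal sketch, assuming repeated 3-D positions in millimetres are already available from the capture system. The function names, the sample values, and the centroid-based metric are illustrative assumptions rather than a standardized definition, and the 0.5 mm default simply reuses the screw-grabbing tolerance quoted in this article.

```python
import math


def repeatability_mm(points):
    """Largest distance (mm) of any repeated position from the centroid."""
    if not points:
        raise ValueError("need at least one measured position")
    n = len(points)
    centroid = tuple(sum(p[i] for p in points) / n for i in range(3))
    return max(math.dist(p, centroid) for p in points)


def passes(points, tolerance_mm=0.5):
    """True if every repeated position stays within the tolerance of the centroid."""
    return repeatability_mm(points) <= tolerance_mm


# Hypothetical positions (mm) from five repeated grasps of the same screw.
samples = [
    (100.0, 50.0, 20.0),
    (100.2, 50.0, 20.0),
    (99.8, 50.0, 20.0),
    (100.0, 50.3, 20.0),
    (100.0, 49.7, 20.0),
]

print(f"spread: {repeatability_mm(samples):.2f} mm, pass: {passes(samples)}")
```

A production evaluation line would likely use many more repetitions and a statistical metric, but the pass/fail structure is the same.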

For example, if a manufacturer claims the robot "can walk continuously for 10 kilometers without falling," motion capture equipment will record its gait data on different terrains, even simulating scenarios with slight collisions to test its anti-interference capability.

"Many companies now say their robots don't fall when kicked, but with how much force? On what kind of ground? These need quantitative evaluation through motion capture," Zhang Haiwei added. Currently, some domestic institutions have partnered with CHINGMU to set up humanoid robot evaluation lines, focusing on testing "repeatable positioning accuracy." For instance, the error when a robot repeatedly grabs a screw from the same position must not exceed 0.5 millimeters. This is a core indicator for ensuring automated factory production.

When robots enter application scenarios, motion capture can also be responsible for skill assessment.

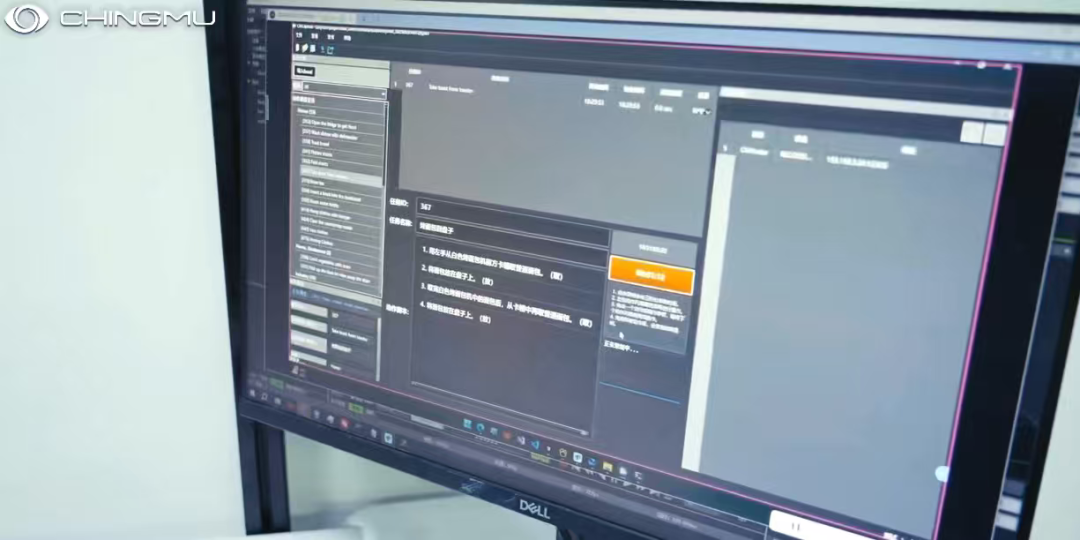

Just as humans need to pass driving tests, robots need to pass "skill tests" before they can work in factories. For example, for the "screw tightening skill," motion capture records the robot's rotation speed, force, and angle when tightening a screw to determine whether it meets the factory's production standards. Another example is the "home service skill," which tests whether the robot avoids hitting people when handing over objects and avoids pinching fingers when opening doors.

"Only robots that pass the evaluation can truly be deployed," Zhang Haiwei stressed. "This is also a key focus CHINGMU is advancing—collaborating with inspection institutions to use motion capture to establish a skill standard system for humanoid robots, further promoting the standardization of humanoid robots."

▍Why the "Optical-Inertial Hybrid" Solution is the Future of Motion Capture

Despite its significant value, traditional motion capture technology has always faced two "bottleneck" problems: optical motion capture fears "occlusion," and inertial motion capture fears "drift."

These two problems are particularly prominent in the humanoid robot field. The robot's fingers and joints easily occlude Markers, and the cumulative error of inertial motion capture can cause the robot to "deviate more and more."

The "Optical-Inertial Hybrid Motion Capture Solution" launched by CHINGMU at the IROS exhibition targets precisely these pain points. It integrates the "high precision" of optical motion capture with the "continuity" of inertial motion capture, and adds distinctive designs such as active illumination encoding and the removal of magnetometers. Together these significantly improve the applicability of optical motion capture in robot scenarios and make "optical-inertial fusion" genuinely usable.

Zhang Haiwei explained to us that traditional optical-inertial hybrid systems mostly use "loose coupling," where optical and inertial systems each output complete motion data, which is then fused by averaging. The drawback of this method is obvious: when optical data is lost, the drifting inertial data pulls down the overall accuracy; when inertial data drifts, the occluded optical data cannot correct it.

CHINGMU's "tight coupling" solution is completely different. It does not rely on the finished output of the optical or inertial system but works directly on their raw data (image pixel information from the optical system, and acceleration and angular velocity from the inertial system), with algorithms that let the two calibrate each other in real time.

For example, when a finger occlusion causes the loss of an optical Marker, the raw inertial data temporarily "fills in," but simultaneously references the position information previously recorded by the optical system to avoid drift; when slight errors occur in the inertial data, the optical pixel data corrects it in real-time.
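The "inertial fills in, optical corrects" behaviour can be illustrated with a one-dimensional toy. This sketch is not CHINGMU's algorithm, and it is not true tight coupling, which would operate on raw pixel observations. It applies a simple alpha-beta style correction to an already-computed optical position, with assumed gains, only to show how an occlusion gap is bridged and bias-induced drift is pulled back.

```python
def fuse(accels, optical, dt, alpha=0.5, beta=0.1):
    """Track 1-D position from accelerometer samples, corrected by optical fixes.

    accels  : acceleration samples (m/s^2), one per time step
    optical : optical position (m) per step, or None while the marker is occluded
    alpha   : share of the position residual applied per visible step (assumed)
    beta    : share of the residual fed back into velocity (assumed)
    """
    pos, vel = 0.0, 0.0
    track = []
    for a, z in zip(accels, optical):
        # Inertial prediction: integrate acceleration, then velocity.
        vel += a * dt
        pos += vel * dt
        if z is not None:
            # Optical correction: pull position and velocity toward the fix.
            residual = z - pos
            pos += alpha * residual
            vel += beta * residual / dt
        track.append(pos)
    return track


dt = 0.01                              # 100 Hz, hypothetical sample rate
steps = 200
biased_accel = [0.1] * steps           # stationary marker, biased accelerometer
optical = [0.0] * steps
optical[100:120] = [None] * 20         # 0.2 s occlusion

drift_only = fuse(biased_accel, [None] * steps, dt)
corrected = fuse(biased_accel, optical, dt)

print(f"inertial only: {drift_only[-1]:.3f} m of drift")
print(f"with optical fixes: worst error {max(abs(p) for p in corrected):.4f} m")
```

In this made-up scenario the inertial-only estimate drifts by about 0.2 m in two seconds, while the corrected track stays within a few millimetres, including across the occlusion.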

"The loose coupling solution is like 5 experts scoring independently and then averaging, with no communication—the shortcomings stack up. But the tight coupling solution is like 5 experts discussing together before scoring—advantages complement, disadvantages cancel out," Zhang Haiwei explained. This solution enhances the "data continuity" of motion capture while maintaining higher positioning accuracy, fully meeting the real-time training needs of robots.

Another highlight of CHINGMU's solution is its "hardcore design" for complex scenes: removing the magnetometer and adding active illumination.

Another pain point of inertial motion capture is "magnetic field interference." Mobile phones, computers, and metal equipment can all affect the magnetometer's heading estimate, causing the robot to "deviate." CHINGMU's solution is straightforward: remove the magnetometer and use optical data, fused through its algorithms, to calibrate the inertial heading in real time, thus thoroughly solving the drift problem.

To address traditional optical motion capture's vulnerability to reflections and noise, CHINGMU developed "actively illuminated encoded Markers." Traditional Markers rely on reflecting light emitted from the camera side, so they are easily confused with reflections from glass and metal. Even dust in the air can create "pseudo-Markers." CHINGMU's Markers, however, illuminate themselves. Each LED has a unique flashing frequency code, like giving each Marker an "ID card." By recognizing the flashing code, the camera can tell the Markers apart. Even when working next to glass tables or metal parts, no data is lost.
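The "ID card" idea can be sketched as a lookup from an observed blink pattern to a marker identity. The article does not describe the real encoding, so everything here is an assumption for illustration: the 4-frame on/off codes, the brightness threshold, the marker names, and the premise that the camera is frame-synchronized with the LEDs.

```python
# Hypothetical codebook: one on/off pattern per marker, over four frames.
CODEBOOK = {
    (1, 0, 1, 1): "thumb_tip",
    (1, 1, 0, 1): "index_tip",
    (1, 1, 1, 0): "middle_tip",
}


def decode_blob(brightness, threshold=128):
    """Map one blob's per-frame brightness to a marker ID, or None if unknown."""
    bits = tuple(1 if b >= threshold else 0 for b in brightness)
    return CODEBOOK.get(bits)


def identify(blobs):
    """Keep only blobs whose blink pattern matches a known marker code."""
    decoded = {}
    for blob_id, brightness in blobs.items():
        marker = decode_blob(brightness)
        if marker is not None:
            decoded[blob_id] = marker
    return decoded


# Made-up brightness readings (0-255) for three bright spots over four frames.
observed = {
    "blob_a": [250, 12, 240, 245],   # blinks like thumb_tip
    "blob_b": [230, 228, 235, 231],  # steady glare from glass: no valid code
    "blob_c": [200, 210, 15, 220],   # blinks like index_tip
}

print(identify(observed))
```

A steady reflection never blinks, so it matches no code and is discarded, which is the intuition behind rejecting "pseudo-Markers."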

"Previously, capturing finger motions required setting up over 20 cameras to avoid occlusion. Now, 2-3 are enough," Zhang Haiwei gave an example. This solution not only reduces equipment costs (by 80% in camera count) but also simplifies deployment, allowing quick setup of motion capture environments in factory workshops and home kitchens. It can even move with workers on production lines for mobile data collection, laying the foundation for "accompanying data collection" in real-world scenarios for humanoid robot deployment.

▍Solving the Humanoid Robot's "Data Thirst"

"Currently, the entire industry is stuck on data. Without data, robots can't work properly," Zhang Haiwei stated bluntly. The data demand of humanoid robots far exceeds that of ChatGPT or autonomous driving.

This is because ChatGPT only needs text data and autonomous driving is "two-dimensional space without interaction," whereas humanoid robots are "three-dimensional space with strong interaction," requiring multi-dimensional data including motion, touch, environment, and object properties. The data volume might be over 1000 times that of autonomous driving.

Addressing this pain point, CHINGMU is advancing the construction of a "High-Quality Humanoid Robot Dataset" and has proposed a "Multi-dimensional Quality Standard," aiming to solve the industry's problems of "scarce data, low quality, and lack of universality."

The first standard for high-quality data is "Multi-modality," because humanoid robots cannot rely only on motion data; they also need touch, environment, and object data. For example, for a simple "screw tightening" action, besides the movement trajectory of finger joints, it's necessary to collect pressure changes at the fingertips (touch), the material hardness of the screw (object property), and the height of the workbench (environmental data). "When a robot tightens a screw, too much force will strip the screw, too little won't tighten it properly. This requires touch data support," Zhang Haiwei explained.

Furthermore, this data needs to achieve the more critical "Spatiotemporal Alignment," meaning motion, touch, and environmental data must be fully synchronized in time and space. For instance, the moment force is applied by the finger, the corresponding pressure data and the screw's position data must be from the exact same time point. Otherwise, the trained robot might have "mismatched actions and force." CHINGMU's motion capture equipment can already achieve "microsecond-level spatiotemporal synchronization," ensuring consistent "timestamps" for all data, providing precise support for subsequent training.
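When streams are sampled at different rates, putting them on one timeline is usually finished in software. The following is a minimal sketch, assuming both streams are already stamped by a shared clock in integer microseconds; the hardware synchronization the article describes is not modelled, and the function names and sample values are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(t, times, values):
    """Linearly interpolate a sampled signal at time t (times sorted ascending)."""
    if not times[0] <= t <= times[-1]:
        raise ValueError("t lies outside the sampled range")
    i = bisect_left(times, t)
    if times[i] == t:
        return values[i]
    t0, t1 = times[i - 1], times[i]
    weight = (t - t0) / (t1 - t0)
    return values[i - 1] + weight * (values[i] - values[i - 1])


def align(motion_times, pressure_times, pressure_values):
    """Pair every motion timestamp with the pressure value at that same instant."""
    return [
        (t, interpolate_at(t, pressure_times, pressure_values))
        for t in motion_times
    ]


# Made-up streams: motion at 100 Hz, fingertip pressure at 250 Hz (microseconds).
motion_times_us = [0, 10_000, 20_000]
pressure_times_us = [0, 4_000, 8_000, 12_000, 16_000, 20_000]
pressure_newtons = [0.0, 0.4, 0.8, 1.2, 1.6, 2.0]

aligned = align(motion_times_us, pressure_times_us, pressure_newtons)
for t, p in aligned:
    print(f"t={t} us  pressure={p:.2f} N")
```

Interpolation only works if the underlying timestamps are trustworthy, which is why clock synchronization at capture time matters more than any later resampling.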

The second standard for high-quality data is the "Three Highs": High Precision, High Sensitivity, and High Degrees of Freedom.

High Precision goes without saying—movement error must be controlled within 0.1 millimeters. High Sensitivity targets touch data—for example, a force change of 0.1 grams when a finger touches an object should be capturable. High Degrees of Freedom serves the "Generalizability" of humanoid robots—the data must cover the full joint movements of fingers, arms, and torso. Even complex actions like "using tweezers to pick up a hair" or "using a key to open a door" must be completely recorded.

"If the dataset has low degrees of freedom, the trained robot can only perform simple actions, unable to use tools or adapt to different scenarios," Zhang Haiwei emphasized. Only truly high-quality data can ensure it supports the robot's "general manipulation," covering more scenarios and being easier to migrate and apply to different robot platforms.

Thirdly, the data must be "Real." Traditional data collection requires building simulated scenes, such as a simulated production line in the lab, which is costly and unrealistic. CHINGMU's innovation is "Accompanying Collection": the capture equipment moves with workers on real production lines and records data on site. This "real-scenario data" is more valuable than lab-simulated data. "We can't build 1000 different production lines in the lab, but we can go to 1000 factories to collect data," Zhang Haiwei said. Accompanying collection not only reduces costs but also captures "human implicit skill experience." This experience cannot be simulated in the lab but is crucial for robot deployment and optimization.

Fourthly, the data should need only simple post-processing, so that it is usable and easy to use. CHINGMU, which is also deeply involved in the film and animation industry, found that for most motion capture data collected for films, 1 day's worth of data took 10 days to "clean." The robotics industry, however, lacks expertise in "data cleaning" and cannot afford that cost. CHINGMU's dataset achieves "simple post-processing," with collected data noise below 1%, so it can be used directly for training without further polishing. Humanoid robots need data that is usable in real time, not finely polished data. This has become one of the core advantages that has earned CHINGMU's data collection the trust of leading companies like Unitree and Zhiyuan.

▍Conclusion: Motion Capture, More Than Just "Recording Movements"

When robots accumulate enough expert movement data, they can even turn around and "teach humans."

In the conversation, Zhang Haiwei described a future integrating humanoid robots with motion capture devices. For example, a badminton coach robot could compare a student's movements with those of a professional athlete via motion capture and correct postures in real-time. A skilled worker robot in a factory could demonstrate the "high-precision screw tightening" action, helping new workers learn quickly.

"Robots can aggregate the experience of 100 experts, making them more precise than human coaches," Zhang Haiwei said. This is also the extended value of embodied intelligence, enabling robots, based on motion capture devices, to transform from "tools" into "teachers."

In CHINGMU's planning, the deployment of humanoid robots might proceed in three steps. The first step is the factory scenario, using motion capture to train robots to perform standardized actions like screw tightening and part assembly. The second step is the healthcare scenario, training robots to help the elderly get dressed and to hand over medicine, with a focus on solving flexible interaction problems. The third step is the home scenario, enabling robots to learn cooking, cleaning, and childcare. This requires more accompanying data from home environments, and here the "training + evaluation" capability of motion capture technology is indispensable.

Today, from the dance optimization of Unitree's H2 to the precise grasping of Zhiyuan's dexterous hand, from the optical-inertial hybrid equipment at the IROS exhibition to the evaluation line at the Zhejiang Institute of Quality Science, CHINGMU is using technology to prove that motion capture is not just the "exclusive tool" of the film and animation industry, but can also become the "infrastructure" for the humanoid robot industry. It solves the challenge of robots "learning movements," establishes the standard for robots "qualifying," and addresses the industry's pain point of "data scarcity."

When more robots master precise movements through motion capture technology, when more companies use high-quality datasets, and when motion capture becomes the sensory capability of robots, the day when humanoid robots move from laboratories to homes and factories might be closer than imagined.