Application of Positioning Systems in XR/Virtual Shooting

Application: XR, virtual shooting, motion capture, optical positioning

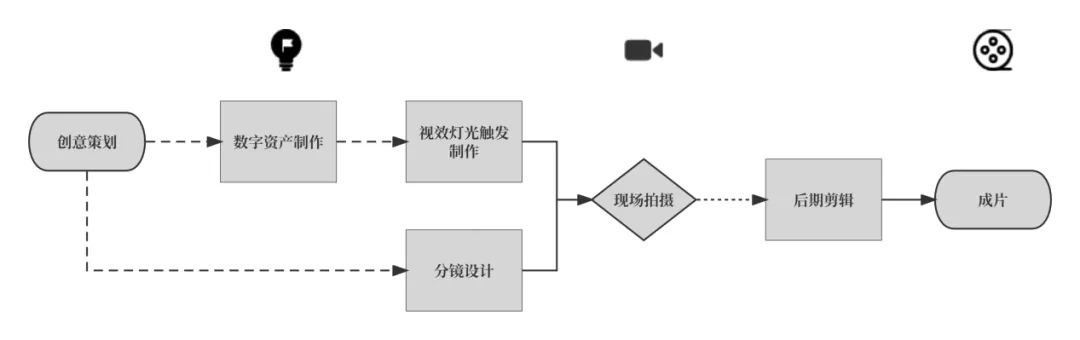

At the beginning of the 21st century, with the rapid development of real-time computer rendering, real-time interactive previsualization in front of green screens emerged. During shooting, the live-action elements filmed in front of the green screen are composited with virtual elements in real time on set. However, because rendering and keying quality were limited, the real-time output was generally used only as a shooting reference and not as the final image. The final high-quality image still had to be produced through post-production compositing.

In recent years, as technology has advanced and the industrialization of film and television has accelerated, a new generation of LED-wall virtual shooting systems based on XR technology has emerged: XR/virtual shooting.

What is XR/Virtual Shooting?

Simply put, it is a filming method that uses virtual reality and augmented reality technology to build virtual scenes within a real set, so that real actors can interact with virtual characters and environments on camera. XR stands for "Extended Reality".

The key to XR shooting is using dedicated cameras for position tracking and feeding that data to the real-time rendering of the background screen. Because the real set is merged with the virtual background, the background can be extended "infinitely" beyond the physical screen, building a complete virtual world and making the result look more realistic, rich, and natural.

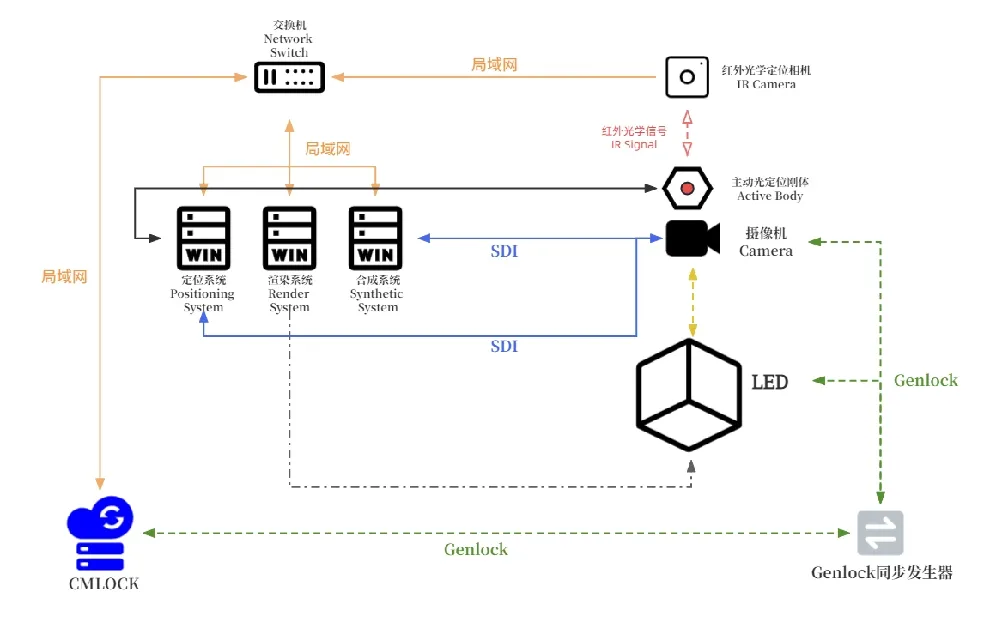

The XR/virtual shooting system consists of core components such as a compositing and rendering server, a positioning server, a camera tracking system, cameras, an LED screen, and a synchronization system. The LED screen serves as the display system and can create a highly immersive scene in a limited space.

During shooting, the scene we see is usually divided into three layers: background, midground, and foreground. Except for the midground (the physical part), the other two layers are realistic virtual scenes generated by a 3D rendering engine, which is what distinguishes this approach from traditional green screen shooting.

In traditional green screen shooting, real elements are filmed in front of the green screen and the virtual elements are only added afterward. With XR/virtual shooting, the green screen is replaced by virtual scenes displayed during the shoot, creating infinite possibilities within a limited shooting space.

XR/virtual shooting has gradually become the preferred efficient creative tool for professionals in digital entertainment

The impact of XR/virtual shooting technology on film and television entertainment is revolutionary and far-reaching, and it has become an indispensable part of film and television production. Compared with traditional green screen shooting, its most obvious advantage is a significant reduction in manual intervention and post-production work.

Through automation and intelligent technology, XR/virtual shooting technology can not only significantly reduce production costs, but also improve shooting efficiency and quality. It enables creators to easily create realistic scenes and special effects, greatly enhancing the audience's viewing experience.

Overall, XR/virtual shooting technology is an essential and efficient creative tool for creators in the film and entertainment industry, helping them create high-quality works more easily.

In the actual XR/virtual shooting workflow, camera positioning is a crucial step. If the camera positioning is not accurate, it can cause misalignment between the LED screen wall and the physical objects in front of the screen (such as actors and props), which seriously affects the quality of the final image.
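As a rough illustration of how a positioning error turns into visible misalignment, the sketch below uses simple similar-triangle geometry. It assumes a flat LED wall, a purely lateral camera position error, and a virtual object rendered at some depth behind the wall. The function name and the example numbers are hypothetical and are not taken from any particular system.

```python
def wall_misalignment(position_error_m, camera_to_wall_m, depth_behind_wall_m):
    """Estimate how far a virtual point is drawn from its correct spot on the wall.

    A virtual point at depth D behind a wall that is W away from the camera
    moves on the wall by e * D / (W + D) when the camera moves sideways by e.
    If tracking reports the camera position with lateral error e, the point is
    therefore drawn that far from where the real camera expects to see it.
    """
    if camera_to_wall_m <= 0 or depth_behind_wall_m < 0:
        raise ValueError("distances must be positive")
    return position_error_m * depth_behind_wall_m / (
        camera_to_wall_m + depth_behind_wall_m
    )


# Hypothetical numbers: 2 cm tracking error, wall 4 m away,
# virtual object placed 16 m behind the wall.
shift_m = wall_misalignment(0.02, 4.0, 16.0)
print(f"misalignment on the wall: {shift_m * 1000:.1f} mm")
```

Under these assumed numbers the virtual object is drawn roughly 16 mm away from where it should appear relative to the physical props, and the error grows as the virtual object is placed deeper behind the wall.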

Motion capture technology plays a core role in camera positioning and tracking in XR/virtual shooting

As one of the key technologies for XR/virtual shooting, motion capture systems can track and record the camera's motion in space, synchronize it with the virtual background, and ensure precise matching between the virtual scene and the real camera position.

In addition, motion capture technology can track the movements of actors and props in real time and map them into the virtual world, achieving seamless integration of the real and the virtual.

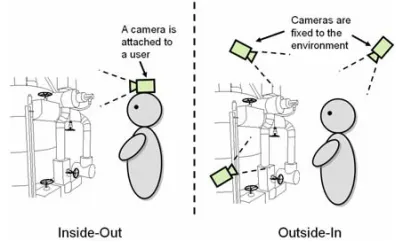

From the perspective of tracking methods, tracking and positioning systems can be divided into two categories: Inside Out tracking and Outside In tracking.

1. Inside Out tracking

In Inside Out tracking, the position sensor is mounted on the tracked object itself. The most commonly used Inside Out sensors include cameras, infrared (IR) lasers, and inertial measurement units (IMUs). Generally speaking, the tracked object determines its own position and orientation by recognizing changes in the surrounding environment.

For example, as humans we need to know our own position in any environment in order to decide whether to move forward, move backward, or turn around. This positioning method is similar to constantly observing our surroundings with the naked eye to confirm where we are.

This positioning method is direct and intuitive, but it is susceptible to external limitations. In a completely unfamiliar environment we can suddenly become confused and lose our sense of direction: for example, when waking up in a strange house after a hangover, or when drifting in a small boat in the middle of the sea with no large ships or navigation marks for reference. In such cases we lose the ability to perceive and judge our own position.

In XR/virtual shooting, this means that when the external environment changes abruptly, Inside Out tracking can no longer localize itself and loses tracking. In practice, the position data then has to be recalibrated, which wastes considerable time and labor and lowers the overall efficiency of the shooting team.

2. Outside In tracking

The position sensors are placed outside the tracked object, usually in fixed positions facing it. In essence, the pose of the tracked object is calculated from the relative relationship between the tracked object and the sensors. Satellite positioning is a familiar analogy: signals from multiple satellites at known positions are combined to work out where the tracked object is.

The most intuitive explanation is that, whether or not we are familiar with our surroundings, when we get lost we can quickly confirm our location by opening map software on a phone or in a car. Simply put, the biggest practical difference between Outside In and Inside Out tracking is that, as long as the tracked object is within range of the sensors, its position can be determined accurately.
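The idea of locating a tracked object from fixed external sensors can be sketched with two rays. The example below is a minimal illustration and not the algorithm of any specific motion capture product: it assumes two calibrated sensors at known positions, each reporting a direction toward the same marker, and returns the midpoint of the shortest segment between the two rays. The function name and the sample coordinates are hypothetical.

```python
def triangulate_two_rays(origin_a, dir_a, origin_b, dir_b):
    """Estimate a marker position from two sensor rays.

    origin_*: sensor position (x, y, z).
    dir_*:    direction from that sensor toward the marker (any length).
    Returns the midpoint of the shortest segment connecting the two rays,
    which equals the intersection point when the rays meet exactly.
    """
    def sub(p, q):
        return tuple(a - b for a, b in zip(p, q))

    def dot(p, q):
        return sum(a * b for a, b in zip(p, q))

    def point_on_ray(origin, direction, t):
        return tuple(o + t * d for o, d in zip(origin, direction))

    w = sub(origin_a, origin_b)
    a, b, c = dot(dir_a, dir_a), dot(dir_a, dir_b), dot(dir_b, dir_b)
    d, e = dot(dir_a, w), dot(dir_b, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the position cannot be resolved")

    t_a = (b * e - c * d) / denom  # parameter of closest point on ray A
    t_b = (a * e - b * d) / denom  # parameter of closest point on ray B
    p_a = point_on_ray(origin_a, dir_a, t_a)
    p_b = point_on_ray(origin_b, dir_b, t_b)
    return tuple((x + y) / 2 for x, y in zip(p_a, p_b))


# Two sensors 4 m apart, both looking at a marker at (2, 3, 1).
marker = triangulate_two_rays((0, 0, 0), (2, 3, 1), (4, 0, 0), (-2, 3, 1))
print(marker)
```

Real systems typically combine many more than two sensors and solve a least-squares problem, which is why a marker remains locatable as long as enough sensors can see it.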

Note that when using optical motion capture, controlling the natural light on set is crucial: if natural light is too strong, it may degrade capture quality. On-site deployment may also be subject to certain limitations.

To address these problems, an active infrared optical scheme can be used. Its advantages are long capture distance, good stability, and strong resistance to jitter. Adopting this active scheme for XR positioning therefore increases the capture distance of the motion capture cameras and enables motion capture not only indoors but also outdoors in strong natural light.

The biggest advantage of the Outside In positioning method is that it is not limited by the camera's motion trajectory, and it is widely used in virtual shooting environments such as XR, VP, and green screen studios. A single set of equipment can accurately determine the positions of multiple cameras simultaneously and capture cameras, props, and the full-body movements of actors, including actors in costume, in the same motion capture studio. Real-time interaction between real people and digital humans can be displayed on set, so the footage matches expectations while saving shooting space, shortening post-production time, and reducing post-production costs.

XR/virtual shooting technology was used extensively in the movie 'King of the Sky'. CHINGMU's independently developed optical motion capture system provides high precision, low latency, and multi-camera tracking. Using the Outside In positioning method to track the motion of one or more cameras, the system accurately located camera positions and actor movements so that they could be combined with the special effects.

Through the joint efforts of the production team, realistic flight scenes were recreated, giving the actors an immersive filming environment. With XR/virtual shooting, the creative team of 'King of the Sky' was able to overcome difficulties in presenting space, perspective, and motion, ultimately delivering a highly impactful visual result to the audience.

Because the resolution of the optical system is limited, and because the cameras are frequently occluded or fail to capture markers during actual XR shooting, marker points may also jitter in the optical camera view.

To solve these problems, an optical-inertial hybrid algorithm can be adopted. Inertial sensors are integrated with the tracked camera, the optical and inertial positioning data are synchronized in software, and the two data streams are fused to smooth the positioning curve, which significantly improves the XR shooting result.
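To make the fusion idea concrete, here is a minimal one-dimensional sketch. It is not CHINGMU's algorithm; it assumes a simple predict-and-correct filter in which inertial acceleration drives the prediction and each optical sample, when available, pulls the estimate back. The function name, the gains `k_pos` and `k_vel`, and the sample data are all hypothetical.

```python
def fuse_optical_inertial(optical, accel, dt, k_pos=0.2, k_vel=0.05):
    """Fuse optical positions with inertial acceleration along one axis.

    optical: optical position per sample, or None when the marker is occluded.
    accel:   acceleration per sample from the inertial sensor.
    dt:      sample period in seconds.
    k_pos, k_vel: assumed correction gains (smaller means smoother output).
    """
    pos = next(z for z in optical if z is not None)  # start at first valid fix
    vel = 0.0
    fused = []
    for z, a in zip(optical, accel):
        # Predict: integrate the inertial measurement.
        pos += vel * dt + 0.5 * a * dt * dt
        vel += a * dt
        # Correct: blend in the optical sample if the marker was seen.
        if z is not None:
            residual = z - pos
            pos += k_pos * residual
            vel += (k_vel / dt) * residual
        fused.append(pos)
    return fused


# A stationary camera at 1.0 m with +/- 1 cm optical jitter and a short occlusion.
optical = [1.0 + (0.01 if i % 2 else -0.01) for i in range(200)]
optical[120:125] = [None] * 5
smoothed = fuse_optical_inertial(optical, [0.0] * 200, dt=0.01)
print(max(abs(p - 1.0) for p in smoothed[60:120]))
```

In this sketch the prediction bridges occluded samples while the optical correction keeps inertial drift bounded. A production system would more likely use a full 6-DoF filter, such as a Kalman filter, rather than fixed gains.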

These algorithms can be implemented with the data processing and calculation functions of the optical system's built-in software. The synchronization system keeps the data from the optical motion capture cameras aligned with the output signals of the other devices in the XR system. Mainstream third-party software such as Aximmetry, Pixotope, Disguise, and Hecoos is then used for further processing, ultimately producing high-quality results.
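One software-side aspect of synchronization can be sketched as resampling tracking data onto video frame times. This is only an illustration under stated assumptions: real XR stages usually rely on hardware signals such as genlock and timecode, and the function name, timestamps, and positions below are hypothetical.

```python
from bisect import bisect_left


def position_at_frame(frame_time, sample_times, positions):
    """Linearly interpolate a tracked position at a video frame timestamp.

    sample_times: ascending timestamps (seconds) of the tracking samples.
    positions:    (x, y, z) tuple for each tracking sample.
    Frame times outside the sampled range are clamped to the nearest sample.
    """
    i = bisect_left(sample_times, frame_time)
    if i == 0:
        return positions[0]
    if i == len(sample_times):
        return positions[-1]
    t0, t1 = sample_times[i - 1], sample_times[i]
    w = (frame_time - t0) / (t1 - t0)  # 0 at the earlier sample, 1 at the later
    return tuple(a + w * (b - a) for a, b in zip(positions[i - 1], positions[i]))


# Tracking samples every 10 ms; the video frame falls between two of them.
times = [0.00, 0.01, 0.02]
track = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 2.0, 0.0)]
print(position_at_frame(0.015, times, track))
```

Interpolating to the frame timestamp means the renderer receives a camera position that corresponds to the moment the frame is exposed, rather than the most recent tracking sample.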

XR/virtual shooting has a wide range of application scenarios in the field of digital entertainment

The advantages of using XR/virtual shooting technology, including saving manpower and time costs, enriching creative space, improving shooting and production efficiency, and real-time visualization of images, have made this technology widely used in fields such as movies, TV dramas, and games.

In addition, it has become an important development direction for the digital entertainment industry. In scenarios such as film and television production, advertising shoots, product launches, stage and variety shows, art exhibitions, virtual concerts, and live broadcasts, XR/virtual shooting technology delivers high-quality, realistic visual content. Through this technology, the audience can enjoy more diverse visual experiences and a stronger sense of immersion.

XR/virtual shooting has opened up a new creative field, bringing a stunning audio-visual feast to the film and entertainment industry! CHINGMU focuses on optical motion capture full process solutions and XR/virtual shooting product services, striving to create the most professional and top-notch visual effects. With rich experience and unique technology, we create real-time, precise, and immersive visual effects for creators, allowing film and entertainment creation to surpass imagination and achieve infinite possibilities!