IROS 2025 | ZJU: Dual-Arm Robot Dexterous Manipulation via Masked Visual-Tactile-Motor Pre-Training and Object Understanding

Research on Dexterous Manipulation of Dual-Arm Robots

To address the challenges of dual-arm dexterous manipulation, researchers including Sun Zhengnan from Ye Qi's team at the Institute of Industrial Control, College of Control Science and Engineering, Zhejiang University, conducted the study VTAO-BiManip: Research on Dexterous Manipulation of Dual-Arm Robots Based on Masked Visual-Tactile-Motor Pre-Training and Object Understanding. The study aims to achieve human-like dual-arm robot manipulation through multimodal pre-training and curriculum reinforcement learning. The work has been published at IROS 2025, a leading robotics conference.

1. Research Scheme

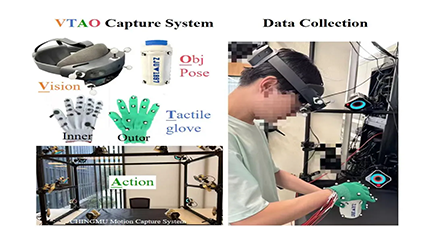

The research team designed and built a VTAO (Visual-Tactile-Action-Object) data collection system to capture demonstrations of human dual-hand manipulation. The system consists of: 1) a HoloLens 2 headset for visual data collection; 2) tactile and motion capture gloves for both hands; 3) the Qingtong Visual Motion Capture System, which accurately tracks hand movements and 6-degree-of-freedom (6-DoF) object poses; and 4) a personal computer for data recording and temporal alignment. With this system, the team collected 216 dual-hand manipulation trajectories involving 26 different bottles. Each trajectory lasts 6–17 seconds, yielding 61,684 frames of time-aligned multimodal data in total.
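
The article does not describe how the PC aligns the sensor streams. One common approach, sketched here as an assumption rather than the authors' method, is to resample every stream onto a reference clock by nearest timestamp and drop frames where any modality has no sample within a tolerance. The stream names, sampling rates, and the `max_skew` tolerance are hypothetical. For scale, 61,684 frames over 216 trajectories is about 286 frames per trajectory on average; the capture rate itself is not stated.

```python
from bisect import bisect_left

def nearest_index(times, t):
    """Index of the timestamp in the sorted list `times` that is closest to t."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    # Choose the closer of the two neighbouring timestamps.
    return i - 1 if t - times[i - 1] <= times[i] - t else i

def align_streams(reference, others, max_skew=0.02):
    """Build time-aligned frames keyed on the reference stream.

    reference: sorted list of (timestamp_s, value)
    others: dict name -> sorted list of (timestamp_s, value)
    max_skew: hypothetical tolerance in seconds; frames exceeding it are dropped.
    """
    index = {name: [t for t, _ in stream] for name, stream in others.items()}
    frames = []
    for t_ref, v_ref in reference:
        frame = {"t": t_ref, "reference": v_ref}
        for name, stream in others.items():
            t_s, v_s = stream[nearest_index(index[name], t_ref)]
            if abs(t_s - t_ref) > max_skew:
                frame = None  # some modality has no sample close enough
                break
            frame[name] = v_s
        if frame is not None:
            frames.append(frame)
    return frames

# Hypothetical example: 1 s of vision at 30 Hz aligned with tactile data at 100 Hz.
vision = [(k / 30, f"img{k}") for k in range(30)]
tactile = [(k / 100, f"tac{k}") for k in range(100)]
frames = align_streams(vision, {"tactile": tactile})
print(len(frames))  # 30: every vision frame has a tactile sample within 20 ms
```

Nearest-timestamp matching keeps the slowest stream's frame rate and never interpolates sensor values, which is a reasonable default for discrete readings such as tactile arrays.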

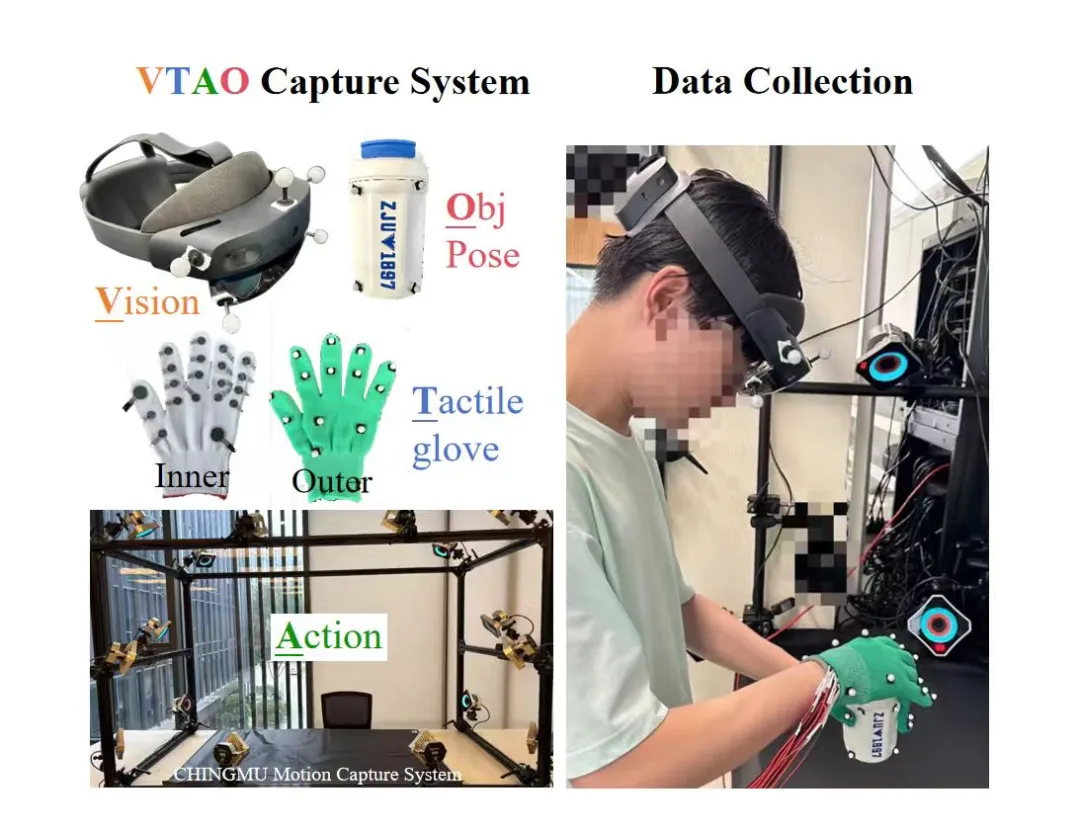

Based on the Masked Autoencoder (MAE) architecture, the research team proposed the VTAO-BiManip pre-training framework, which adopts an encoder-decoder structure: the encoder processes visual, tactile, and current action inputs, and projects information from different modalities into latent tokens via an attention mechanism; the decoder reconstructs the original perceptual modalities, predicts subsequent actions, and estimates object states. This joint reconstruction mechanism enables cross-modal feature fusion during pre-training, allowing the model to recover complete environmental interactions from partial perceptual observations.
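
To make the joint reconstruction objective concrete, here is a minimal NumPy sketch that assumes an MAE-style loss: reconstruction error is computed only on the masked tokens of each input modality (vision, tactile, action), and the action-prediction and object-state terms are added on top. The dictionary keys, the use of plain MSE for every term, and the optional `weights` are assumptions for illustration; the article does not give the paper's exact loss terms or weighting.

```python
import numpy as np

def masked_mse(pred, target, mask):
    """Mean squared error over masked tokens only, as in MAE.

    pred, target: (num_tokens, dim) arrays; mask: (num_tokens,) bool, True = masked.
    Assumes at least one token is masked.
    """
    per_token = ((pred - target) ** 2).mean(axis=-1)
    return float(per_token[mask].mean())

def joint_loss(outputs, targets, masks, weights=None):
    """Hypothetical joint objective: masked reconstruction + action prediction + object state."""
    weights = weights or {}
    total = 0.0
    # Reconstruct the masked parts of each input modality.
    for m in ("vision", "tactile", "action"):
        total += weights.get(m, 1.0) * masked_mse(outputs[m], targets[m], masks[m])
    # Auxiliary heads: next-action prediction and object-state estimation.
    for k in ("next_action", "object_state"):
        err = np.mean((np.asarray(outputs[k]) - np.asarray(targets[k])) ** 2)
        total += weights.get(k, 1.0) * float(err)
    return total
```

Restricting the reconstruction loss to masked tokens means the model is rewarded only for inferring what it could not see, which is what drives the cross-modal fusion described above.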

To address the challenge of learning multiple skills, the research team adopted the Proximal Policy Optimization (PPO) reinforcement learning algorithm and introduced a two-stage curriculum reinforcement learning framework. In the first stage, the bottle is fixed to the table so that the left hand learns to grasp the bottle and the right hand learns to unscrew the cap; in the second stage, the bottle is released, forcing the two hands to learn coordinated manipulation.
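
For reference, PPO's clipped surrogate objective (Schulman et al., 2017) and a two-stage schedule matching this curriculum can be sketched as follows. This is a generic NumPy illustration, not the authors' training code: the `clip_eps=0.2` default is the value commonly used with PPO, the iteration boundaries come from the 1,000/3,500 split reported in the experiments, and the `bottle_fixed` flag is a hypothetical environment option.

```python
import numpy as np

def ppo_clip_loss(logp_new, logp_old, advantages, clip_eps=0.2):
    """PPO clipped surrogate policy loss (negated so it can be minimized)."""
    ratio = np.exp(logp_new - logp_old)  # pi_new(a|s) / pi_old(a|s)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Pessimistic (element-wise minimum) bound, averaged over the batch.
    return float(-np.mean(np.minimum(unclipped, clipped)))

STAGE1_ITERS = 1000  # bottle fixed to the table
STAGE2_ITERS = 3500  # bottle released

def curriculum_stage(iteration):
    """Return (stage, env_overrides) for a training iteration; `bottle_fixed` is hypothetical."""
    if iteration < STAGE1_ITERS:
        # Stage 1: left hand learns to grasp, right hand learns to unscrew the cap.
        return 1, {"bottle_fixed": True}
    if iteration < STAGE1_ITERS + STAGE2_ITERS:
        # Stage 2: a free bottle forces coordinated dual-hand manipulation.
        return 2, {"bottle_fixed": False}
    raise ValueError("iteration is past the 4,500-iteration schedule")
```

Keeping the curriculum as a pure function of the iteration count lets the same PPO loop run unchanged across stages; only the environment configuration switches at the boundary.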

Innovation and Advantages of the Scheme:

- Introduced action prediction and object understanding as supplementary modalities to achieve cross-modal feature fusion.

- Designed the VTAO data collection system to capture multimodal demonstration data of human dual-hand manipulation.

- Proposed a two-stage curriculum reinforcement learning framework to effectively address the challenge of dual-hand sub-skill learning.

- Verified the method's effectiveness in both simulated and real environments, with a success rate exceeding existing methods by more than 20%.

2. Experimental Verification

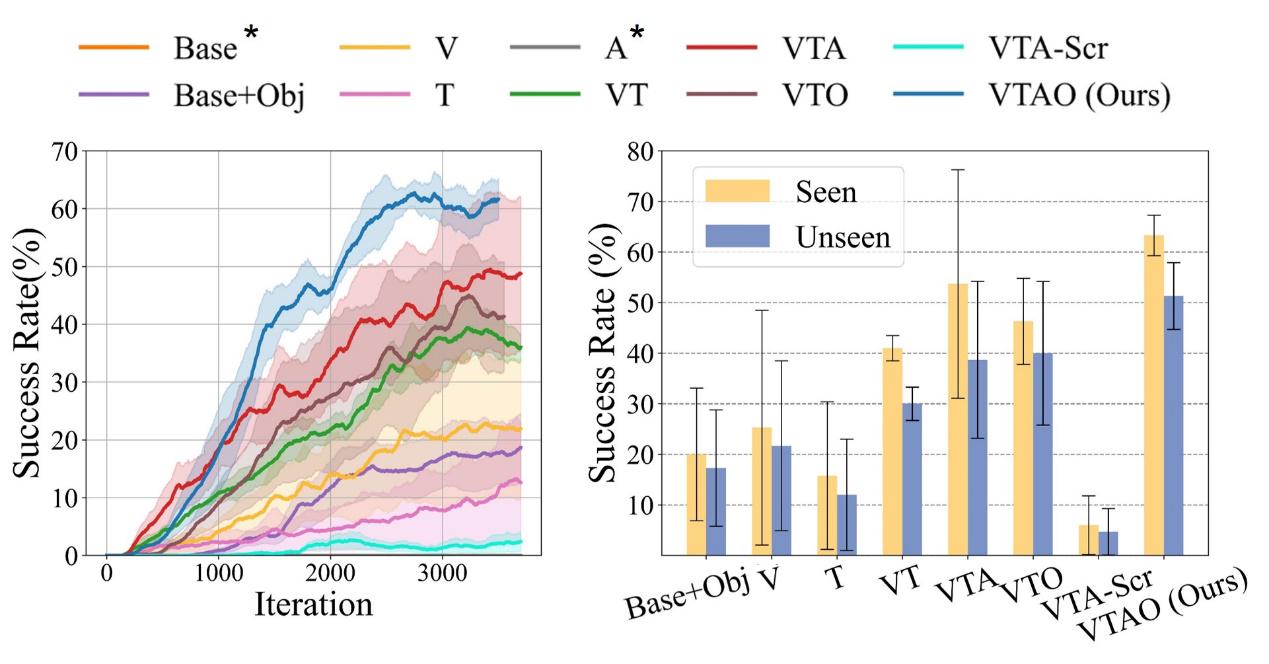

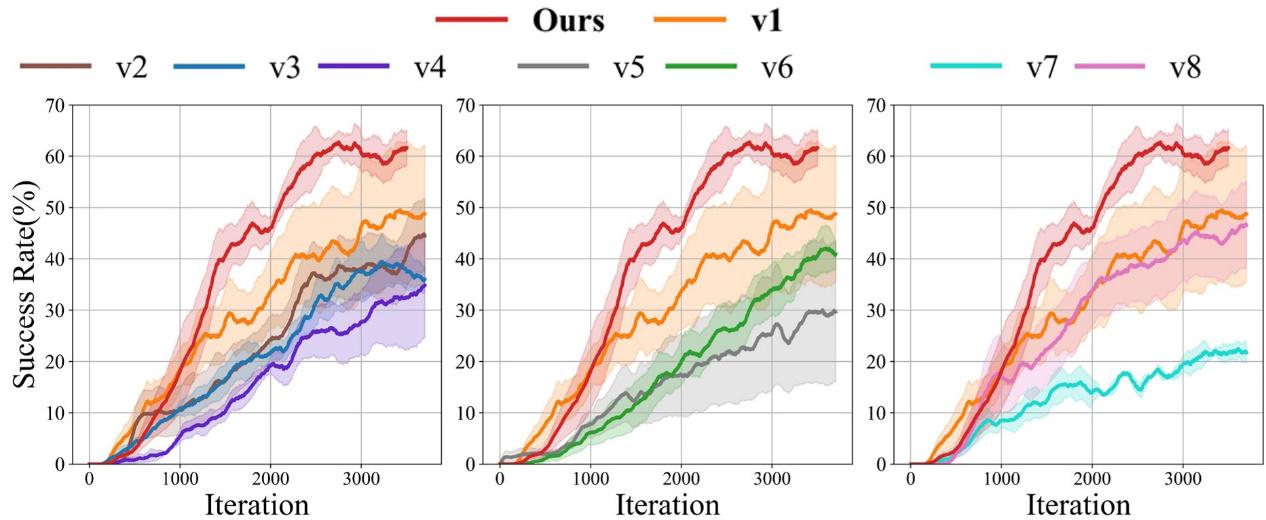

The experiments deployed a dual-hand bottle-cap rotation task in the Isaac Gym simulation environment. Fifteen bottles from ShapeNet were selected: 10 for training and 5 for testing. Experiments ran on a machine with an Intel Xeon Gold 6326 CPU and an NVIDIA RTX 3090 GPU. In the pre-training stage, the AdamW optimizer was used with a learning rate of 2e-5, and the masking ratios were set to 0.75 for vision, 0.5 for tactile, and 0.5 for action. The reinforcement learning stage followed the two-stage curriculum, with 1,000 iterations in the first stage and 3,500 in the second, taking approximately 62 hours in total.
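
The per-modality masking ratios can be applied with MAE-style random masking: shuffle each modality's token indices and hide the first fraction. The sketch below assumes each modality has already been split into tokens; the token counts in the example (196 vision patches, 20 tactile tokens, 24 action tokens) are hypothetical and not reported in the article.

```python
import numpy as np

# Masking ratios reported for pre-training.
MASK_RATIOS = {"vision": 0.75, "tactile": 0.5, "action": 0.5}

def random_mask(num_tokens, ratio, rng):
    """MAE-style random masking. Returns a bool array where True = masked (hidden from the encoder)."""
    num_masked = int(round(num_tokens * ratio))
    order = np.argsort(rng.random(num_tokens))  # random permutation of token indices
    mask = np.zeros(num_tokens, dtype=bool)
    mask[order[:num_masked]] = True
    return mask

def mask_modalities(token_counts, rng):
    """Draw an independent random mask for each modality."""
    return {m: random_mask(n, MASK_RATIOS[m], rng) for m, n in token_counts.items()}

rng = np.random.default_rng(0)
masks = mask_modalities({"vision": 196, "tactile": 20, "action": 24}, rng)
print({m: int(mask.sum()) for m, mask in masks.items()})
# prints {'vision': 147, 'tactile': 10, 'action': 12}
```

The higher vision ratio reflects the redundancy of image patches, the same reasoning behind MAE's 75% default, while tactile and action tokens carry denser information and are masked less aggressively.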

Comparisons against baselines with different modality configurations showed that: 1) multimodal joint pre-training (VTA) significantly outperforms single-modality pre-training (V, T, A) and the multimodal method trained without pre-training (VTA-Scr); 2) the gains from VT to VTA (adding action prediction) and to VTO (adding object understanding) indicate that both action prediction and object understanding substantially enhance visual-tactile fusion; 3) VTAO, which combines both, achieved the highest success rate, demonstrating that the action prediction and object understanding tasks are complementary.

Ablation studies further verified key design choices: 1) using dual-hand data yielded higher success rates than using only right-hand data, regardless of which modality was removed; 2) the action prediction mechanism significantly improved downstream task performance; 3) the object understanding module is crucial for adapting the manipulation strategy to the target object's changing state.

3. Experimental Results

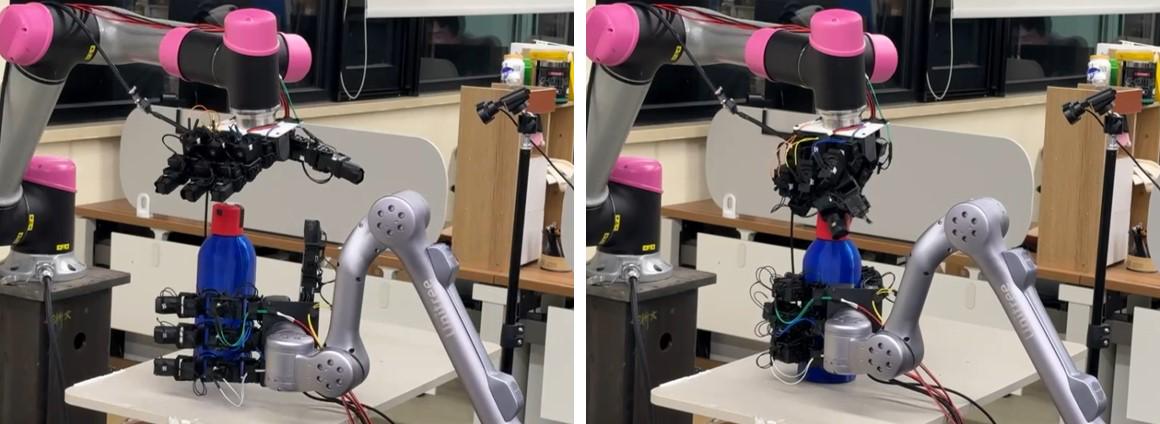

This study shows that the VTAO-BiManip framework achieves dual-hand dexterous manipulation by combining multimodal pre-training with curriculum reinforcement learning. In simulation, the method achieved success rates of 63% on seen objects and 51% on unseen objects, outperforming existing methods by more than 20%. The research team also verified the method's effectiveness in the real world, as shown in Figure 6.

Figure 6: Our manipulation platform

4. References

- Liu Y, et al. M2VTP: Masked Multi-modal Visual-Tactile Pre-training for Robotic Manipulation. ICRA 2024.

- Chen Y, et al. Towards human-level bimanual dexterous manipulation with reinforcement learning. NeurIPS 2022.

- Qin Y, et al. DexMV: Imitation learning for dexterous manipulation from human videos. ECCV 2022.

- Rajeswaran A, et al. Learning complex dexterous manipulation with deep reinforcement learning and demonstrations. RSS 2018.

- He K, et al. Masked autoencoders are scalable vision learners. CVPR 2022.

- Chen Y, et al. Visuo-tactile transformers for manipulation. CoRL 2022.

- Li H, et al. See, Hear, and Feel: Smart Sensory Fusion for Robotic Manipulation. CoRL 2023.

- Zhao T Z, et al. Learning fine-grained bimanual manipulation with low-cost hardware. arXiv 2023.

- Kataoka S, et al. Bi-manual manipulation and attachment via sim-to-real reinforcement learning. arXiv 2022.

- Chen Y, et al. Sequential dexterity: Chaining dexterous policies for long-horizon manipulation. arXiv 2023.

- Nair S, et al. R3M: A universal visual representation for robot manipulation. arXiv 2022.

- Brohan A, et al. RT-1: Robotics transformer for real-world control at scale. arXiv 2022.

- Xiao T, et al. Masked visual pre-training for motor control. arXiv 2022.

- Yuan W, et al. GelSight: High-resolution robot tactile sensors for estimating geometry and force. Sensors 2017.

- Makoviychuk V, et al. Isaac Gym: High performance GPU-based physics simulation for robot learning. arXiv 2021.