
Case Sharing | CUHK-Shenzhen School of Data Science: Underwater Mocap Equips Robots with "AI Eyes"

While land and aerial robots have already demonstrated their capabilities in streets and skies, the oceans, which cover 71% of the Earth's surface, remain a "blue ocean" for AI technology. Underwater robot perception and interaction has become a key research direction for AI in marine applications, but it faces multiple challenges: "foggy vision" caused by optical limitations, "signal isolation" because water blocks electromagnetic waves, and scarce underwater training data. In unknown underwater environments, cameras already become severely "short-sighted" at a depth of 1 meter, and beyond 10 meters electromagnetic waves can no longer propagate effectively. Existing methods lack efficient algorithms and simulation platforms for verification, which makes it difficult to cope with the complexity and uncertainty of underwater environments.

To address these issues, Professor Wu Junfeng's research team at the School of Data Science, The Chinese University of Hong Kong, Shenzhen, including PhD student Chen, carried out a research project titled "Equipping Underwater Robots with Perceptive 'AI Eyes'". CHINGMU's Series U underwater motion capture cameras and the supporting underwater motion capture software provided high-precision data that the team used for algorithm verification and evaluation and for building underwater robot training datasets. The research aims to break through the technical bottleneck of the "underwater no-man's land" by fusing visual, acoustic, and other sensors, giving robots a "marine AI brain" that enables autonomous navigation and task execution in complex underwater environments and opens new possibilities for AI in marine applications.

I. Research Plan

Collecting real underwater data is expensive and such data is scarce. To address this core pain point, the research team used simulation to replicate underwater perception environments and supply data for algorithm iteration. The specific plan includes:

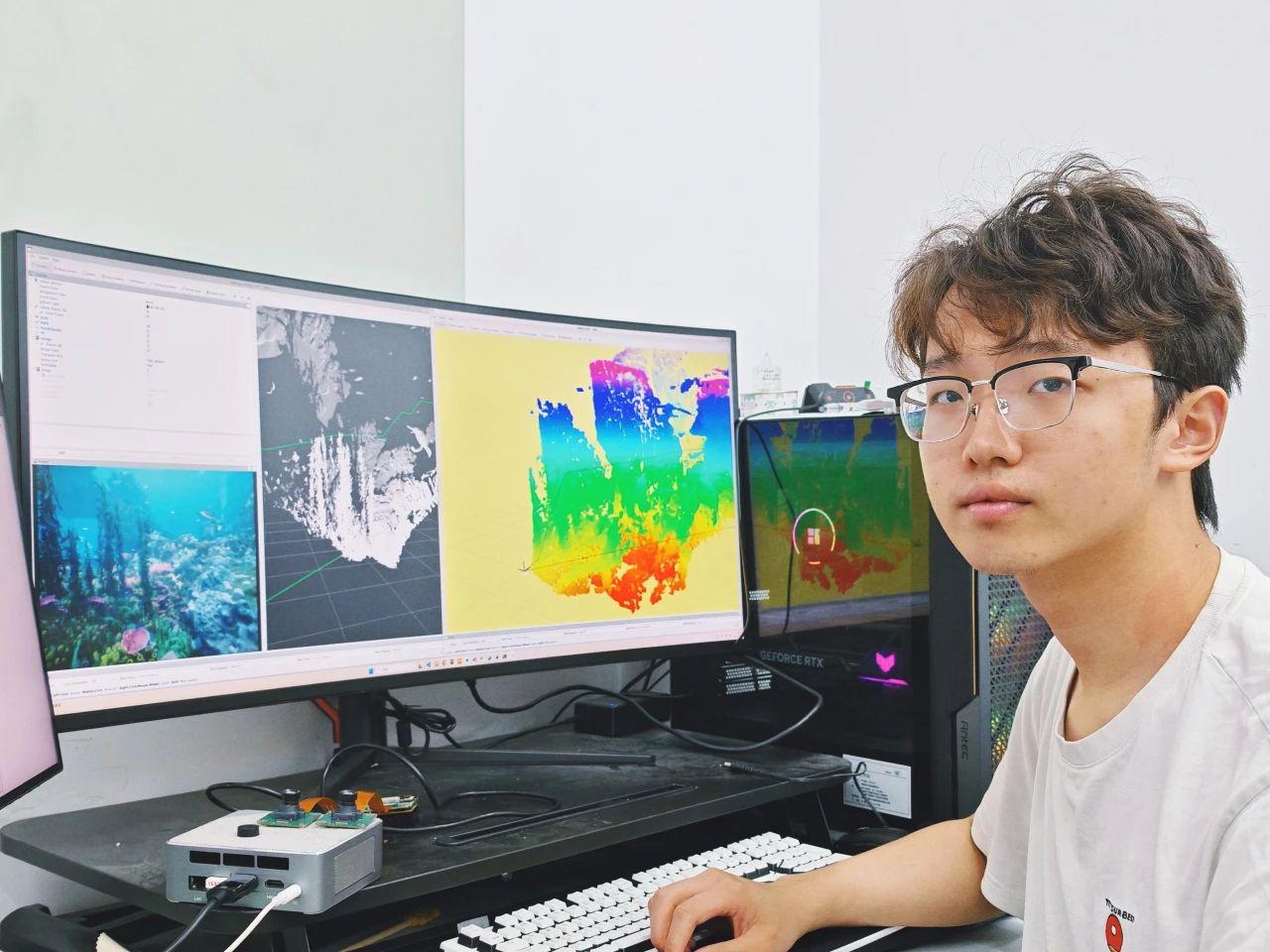

- Construction of UE5 Simulation Test Platform: PhD student Chen built a comprehensive simulation test platform based on Unreal Engine 5 (UE5). Using UE5's physics engine and rendering system, the platform generates highly realistic seabed scenes with complex terrain, coral, aquatic plants, and sediment. It can simulate underwater environments of varying turbidity and output high-quality depth images and simulated sonar data in real time, providing a simulation basis for quickly verifying the perception, positioning, and navigation algorithms of underwater robots.
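The article does not describe how the UE5 platform models turbidity. A common textbook simplification, assumed here purely for illustration, treats each color channel separately: the clear-scene color decays as exp(-βd) along the line of sight, while backscattered "veiling light" fills in the rest. The function name `apply_turbidity` and all coefficient values below are hypothetical and are not taken from the team's platform.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]


def apply_turbidity(clear: RGB, distance_m: float, beta: RGB, veil: RGB) -> RGB:
    """Degrade a clear-water pixel color seen through `distance_m` meters of water.

    Simplified per-channel model (an assumption, not the platform's renderer):
        observed = clear * t + veil * (1 - t),  with  t = exp(-beta * distance)
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    out = []
    for j, b, v in zip(clear, beta, veil):
        t = math.exp(-b * distance_m)  # fraction of direct light that survives
        out.append(j * t + v * (1.0 - t))
    return (out[0], out[1], out[2])


# Hypothetical per-channel attenuation coefficients (1/m) for R, G, B.
CLEAR_WATER: RGB = (0.4, 0.1, 0.05)
TURBID_WATER: RGB = (1.2, 0.9, 0.8)
VEIL: RGB = (0.05, 0.25, 0.30)  # hypothetical bluish-green backscatter color

if __name__ == "__main__":
    white = (1.0, 1.0, 1.0)
    for d in (0.5, 1.0, 5.0):
        print(d, apply_turbidity(white, d, CLEAR_WATER, VEIL),
              apply_turbidity(white, d, TURBID_WATER, VEIL))
```

Raising the coefficients (turbid water) pulls distant pixels toward the veil color much sooner, which is the qualitative "foggy vision" effect a simulator needs to reproduce.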

- 3D Reconstruction of Seabed Environment: Building on the simulation platform, the team developed a neural network model that combines the robot's prior knowledge of the seabed environment with temporal history to infer 3D spatial structure from 2D sonar data. The system also uses odometry to spatially register the acquired sensor data, enabling 3D reconstruction of complex seabed environments.
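The network itself is not described in detail, but the geometry behind the "2D to 3D" problem and the odometry-based registration can be sketched. The snippet below assumes a forward-looking imaging sonar that reports range and azimuth only, a sonar frame aligned with the robot body, and a yaw-only odometry pose; `Pose`, `sonar_measure`, `sonar_backproject`, and `sensor_to_world` are hypothetical names for illustration.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Pose:
    """Hypothetical odometry pose in the world (pool) frame: meters and yaw in radians."""
    x: float
    y: float
    z: float
    yaw: float


def sonar_measure(p: Vec3) -> Tuple[float, float]:
    """Project a sensor-frame point (x forward, y left, z up) to (range, azimuth).

    Elevation is discarded, which is why the sonar image is only 2D.
    """
    x, y, z = p
    return math.sqrt(x * x + y * y + z * z), math.atan2(y, x)


def sonar_backproject(rng: float, azimuth: float, elevation: float) -> Vec3:
    """Turn a 2D sonar return into a 3D sensor-frame point given an elevation guess.

    Supplying this missing elevation is the gap that priors and temporal
    context (e.g. a learned model) have to fill.
    """
    c = math.cos(elevation)
    return (rng * c * math.cos(azimuth), rng * c * math.sin(azimuth), rng * math.sin(elevation))


def sensor_to_world(p: Vec3, pose: Pose) -> Vec3:
    """Register a sensor-frame point into the world frame using the odometry pose.

    Assumes the sonar frame equals the body frame; roll and pitch are ignored.
    """
    x, y, z = p
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (pose.x + c * x - s * y, pose.y + s * x + c * y, pose.z + z)


if __name__ == "__main__":
    a = sonar_backproject(5.0, 0.2, 0.0)
    b = sonar_backproject(5.0, 0.2, 0.1)
    # Two different 3D points give the same (range, azimuth), up to rounding.
    print(sonar_measure(a), sonar_measure(b))
    print(sensor_to_world(a, Pose(2.0, 3.0, -1.0, math.pi / 2)))
```

Accumulating `sensor_to_world` outputs over many frames is the basic idea of registering sonar data into one consistent map; a real system would use full 6-DoF poses.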

II. Experimental Verification

The research team verified the technology's effectiveness through two core experiments, sonar positioning and multimodal perception, advancing autonomous deep-sea detection technology:

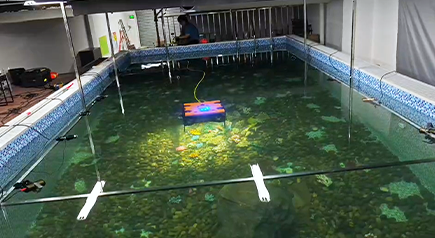

1. Sonar Positioning Experiment

- Experimental Design: A calibration device with precisely designed acoustic reflectors was placed on the bottom of a square laboratory test pool. A robot carrying a forward-looking imaging sonar scanned the device, continuously emitting high-frequency acoustic signals and recording the reflected features.
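The case study does not say how the positioning algorithm uses the calibration device. As one hedged illustration of why a known target helps: if the pool-frame positions of the reflectors are known and the sonar returns have already been matched to them, the sonar's planar pose follows from a closed-form least-squares rigid alignment (the 2D case of the Kabsch/Umeyama method). The function names are hypothetical, correspondences are assumed known, and elevation is ignored by treating returns as horizontal range and bearing.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polar_to_xy(rng: float, bearing: float) -> Point:
    """Convert a sonar return (horizontal range in m, bearing in rad) to sonar-frame x, y."""
    return (rng * math.cos(bearing), rng * math.sin(bearing))


def estimate_pose_2d(observed: List[Point], known: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rigid transform (tx, ty, theta) with known ~= R(theta) * observed + t.

    `observed`: reflector positions in the sonar frame.
    `known`: the matching surveyed reflector positions in the pool frame.
    The result is the sonar's position and heading in the pool frame.
    """
    n = len(observed)
    if n != len(known) or n < 2:
        raise ValueError("need at least two matched reflector pairs")
    ox = sum(p[0] for p in observed) / n
    oy = sum(p[1] for p in observed) / n
    kx = sum(p[0] for p in known) / n
    ky = sum(p[1] for p in known) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(observed, known):
        ax, ay = px - ox, py - oy  # centered observed point
        bx, by = qx - kx, qy - ky  # centered known point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # rotation that best aligns the two point sets
    c, s = math.cos(theta), math.sin(theta)
    tx = kx - (c * ox - s * oy)  # translation maps the rotated centroid onto the known one
    ty = ky - (s * ox + c * oy)
    return tx, ty, theta
```

With noisy returns the same formula still gives the least-squares optimum, which is why several well-separated reflectors make the estimate more robust.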

The research team conducting the sonar positioning experiment

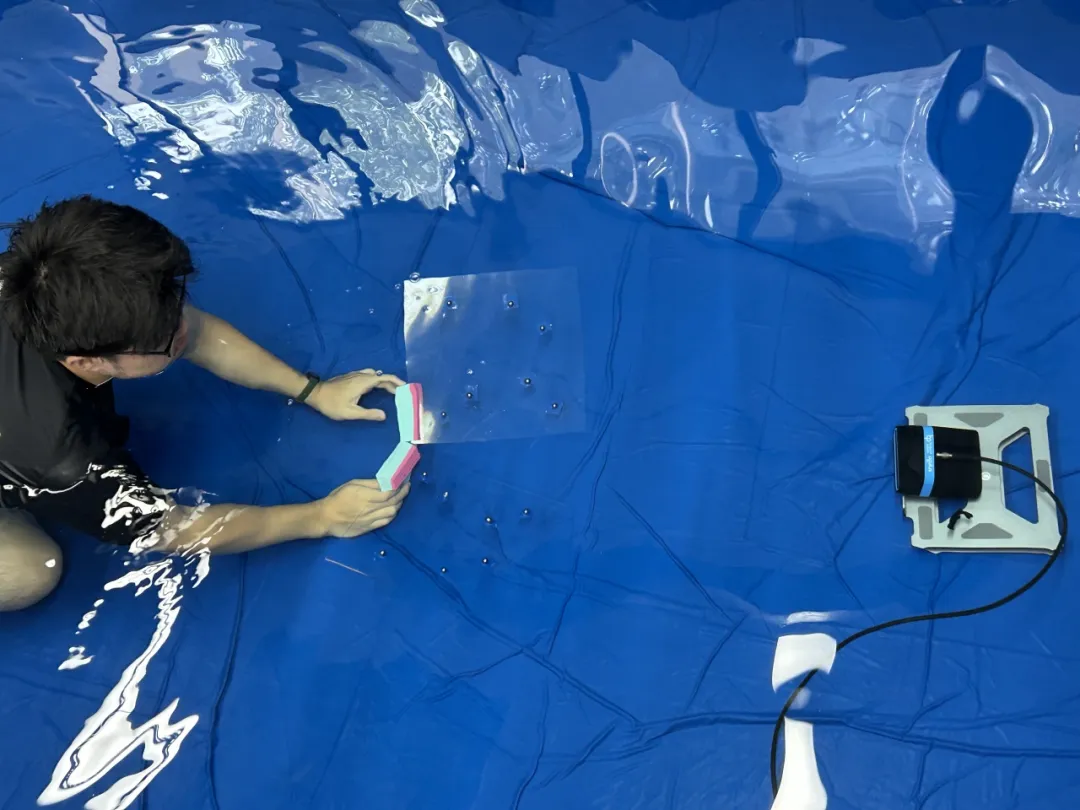

- Algorithm Verification: The robot followed preset trajectories: a figure-eight and a path tracing the letters "LIAS" (short for The Laboratory for Intelligent Autonomous Systems, led by Professor Wu Junfeng). These trajectories included typical motion modes such as consecutive sharp turns and inertial gliding. Using CHINGMU's Series U underwater motion capture cameras and supporting software, the team recorded ground-truth poses and trajectories of the robot along these complex paths, allowing a comprehensive evaluation of the positioning algorithm's pose accuracy under demanding motion such as sharp turns and sudden stops.

The research team using CHINGMU's underwater motion capture system to verify the robot's motion trajectory algorithm
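The accuracy check in the Algorithm Verification step can be sketched as a comparison between an estimated trajectory and the motion capture ground truth. The figure-eight parametrization (a Lissajous curve), its dimensions, and the choice of RMSE and maximum position error as metrics are assumptions for illustration; the sketch does not model CHINGMU's software or data format.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def figure_eight(n: int, a: float = 1.5, b: float = 1.0) -> List[Point]:
    """Sample a hypothetical figure-eight path: x = a sin t, y = b sin t cos t."""
    return [(a * math.sin(t), b * math.sin(t) * math.cos(t))
            for t in (2.0 * math.pi * k / n for k in range(n))]


def trajectory_errors(estimated: List[Point], ground_truth: List[Point]) -> Tuple[float, float]:
    """Return (RMSE, max) of the position error between time-matched trajectories.

    Assumes both trajectories are already in the same pool frame and sampled at the
    same instants; otherwise they first need time synchronization and a rigid
    alignment (e.g. Umeyama's method).
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and the same length")
    errors = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    rmse = math.sqrt(sum(err * err for err in errors) / len(errors))
    return rmse, max(errors)


if __name__ == "__main__":
    truth = figure_eight(200)                              # stands in for mocap ground truth
    estimate = [(x + 0.03, y - 0.04) for x, y in truth]    # a biased position estimate
    print(trajectory_errors(estimate, truth))
```

Reporting the maximum alongside the RMSE helps expose short error spikes, such as those that can occur at sharp turns, which an average alone would hide.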

2. Multimodal Perception Experiment - Experimental Design:

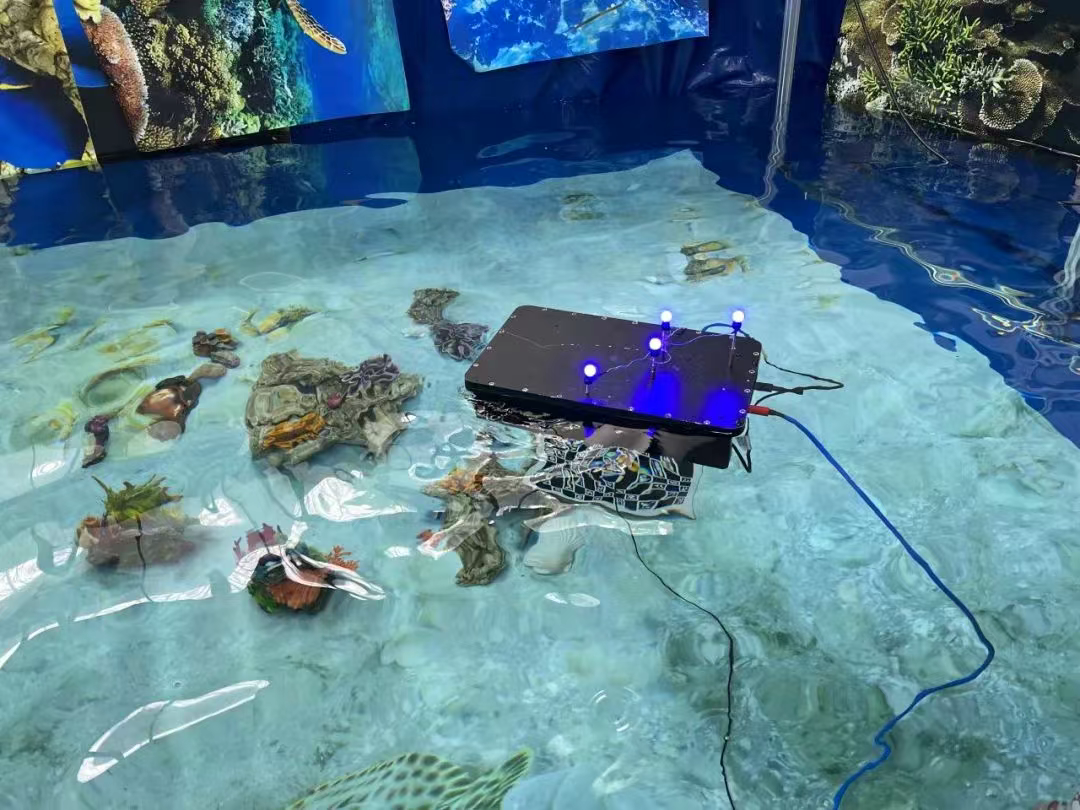

An adjacent experimental area was built as a miniature seabed environment, with fine sand as the base and 3D-printed marine life communities and reef structures.

- Experimental Verification: A robot carrying a multi-sensor suite followed a detailed detection procedure:

- While circling the reefs along a spiral trajectory, it recorded changes in the dynamic underwater light field, taking advantage of the millisecond-level response of CHINGMU's optical motion capture cameras;

- During the uniform cruising phase, a Doppler Velocity Log (DVL) measured velocity relative to the seabed in real time while a 3D point cloud was built simultaneously;

- On entering key survey areas, the robot switched to a "cross mode", scanning back and forth along two orthogonal axes to spatially register optical images with acoustic features.
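The DVL step in the procedure above can be illustrated with simple dead reckoning: body-frame velocities over the seabed are rotated by the robot's heading and integrated over time. This is a minimal planar sketch; the heading source (e.g. an IMU or compass), the sample format, and the function name are assumptions, not details from the article.

```python
import math
from typing import Iterable, List, Tuple

# One hypothetical sample: (dt in s, forward velocity m/s, lateral velocity m/s, yaw in rad)
Sample = Tuple[float, float, float, float]


def dvl_dead_reckon(samples: Iterable[Sample],
                    start: Tuple[float, float] = (0.0, 0.0)) -> List[Tuple[float, float]]:
    """Integrate DVL bottom-relative body velocities into a planar world-frame track.

    The heading in each sample is assumed to come from another sensor; the DVL
    itself only provides velocity relative to the seabed.
    """
    x, y = start
    track = [(x, y)]
    for dt, vx, vy, yaw in samples:
        c, s = math.cos(yaw), math.sin(yaw)
        x += (c * vx - s * vy) * dt  # rotate body velocity into the world frame, then integrate
        y += (s * vx + c * vy) * dt
        track.append((x, y))
    return track


if __name__ == "__main__":
    # Cruise at 0.5 m/s heading 0 rad for 2 s, then at 0.5 m/s heading pi/2 for 2 s.
    samples = [(0.1, 0.5, 0.0, 0.0)] * 20 + [(0.1, 0.5, 0.0, math.pi / 2)] * 20
    print(dvl_dead_reckon(samples)[-1])
```

Because small velocity and heading errors accumulate over time, a track like this drifts, which is one reason independent ground truth from motion capture is valuable for evaluating it.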

A single experiment can collect more than 50 GB of multi-source heterogeneous data. With real poses from underwater motion capture serving as supervision labels, this data is being used to train a new generation of multimodal perception fusion algorithms, with the aim of building an "acoustic-optical joint perception" intelligent system for deep-sea robots.
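Using motion capture poses as supervision requires pairing each sensor frame with a ground-truth pose at the same instant, since the mocap system and the sensors generally sample at different rates. A minimal sketch, assuming strictly increasing timestamps on a clock shared by all devices, linearly interpolates mocap positions at each sensor timestamp; the function names are hypothetical, and orientations would need spherical interpolation (slerp) rather than this linear scheme.

```python
import bisect
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def interpolate_position(mocap_t: Sequence[float], mocap_xyz: Sequence[Vec3], query_t: float) -> Vec3:
    """Linearly interpolate mocap positions at one sensor timestamp."""
    if not mocap_t or not mocap_t[0] <= query_t <= mocap_t[-1]:
        raise ValueError("query time outside the mocap recording")
    i = bisect.bisect_right(mocap_t, query_t)  # first mocap sample strictly after query_t
    if i == len(mocap_t):  # query_t equals the last mocap timestamp
        return tuple(mocap_xyz[-1])
    t0, t1 = mocap_t[i - 1], mocap_t[i]
    w = (query_t - t0) / (t1 - t0)  # fractional position between the two samples
    p0, p1 = mocap_xyz[i - 1], mocap_xyz[i]
    return (p0[0] + w * (p1[0] - p0[0]),
            p0[1] + w * (p1[1] - p0[1]),
            p0[2] + w * (p1[2] - p0[2]))


def label_frames(frame_t: Sequence[float], mocap_t: Sequence[float],
                 mocap_xyz: Sequence[Vec3]) -> List[Tuple[float, Vec3]]:
    """Pair each sensor frame timestamp with an interpolated ground-truth position label."""
    return [(t, interpolate_position(mocap_t, mocap_xyz, t)) for t in frame_t]
```

Frames whose timestamps fall outside the mocap recording raise an error instead of being extrapolated, so no training sample receives a fabricated label.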

3. Experimental Summary

- Motion capture data collected by CHINGMU's underwater motion capture system served as ground truth in the sonar and multimodal sensor fusion experiments, allowing the team to evaluate the accuracy and effectiveness of its self-developed algorithms.

- CHINGMU's motion capture system provides ground truth for the pool dataset, allowing users to evaluate the performance of their own algorithms on the dataset.