How is Motion Capture Technology Revolutionizing the Drone Industry?

In recent years, China's drone industry has experienced remarkable growth, expanding its applications across multiple fields, including military operations, scientific research, commercial performances, emergency rescue, environmental monitoring, and logistics. According to a report by China Business Research Institute titled Market Research and Forecast Report on China's Drone Industry (2025-2030), the civilian drone market in China reached RMB 117.43 billion in 2023, marking a year-on-year growth of 32%. This figure is projected to reach RMB 140.92 billion in 2024 and RMB 169.1 billion in 2025. The number of drone operation companies in China has also surged from 7,149 in 2019 to 19,825 in 2023, with a compound annual growth rate (CAGR) of approximately 29.05%.
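The growth figures quoted above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the helper name `cagr` is not from the report, and the interval count assumes four annual steps between 2019 and 2023.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate over `years` annual intervals."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Drone operation companies: 7,149 in 2019 and 19,825 in 2023 (four intervals).
companies_cagr = cagr(7_149, 19_825, 2023 - 2019)

# Year-on-year growth implied by the market-size forecasts (RMB billion).
growth_2024 = 140.92 / 117.43 - 1
growth_2025 = 169.1 / 140.92 - 1

print(f"Company CAGR 2019-2023: {companies_cagr:.2%}")
print(f"Implied market growth 2024: {growth_2024:.1%}")
print(f"Implied market growth 2025: {growth_2025:.1%}")
```

Run as written, the company count gives a rate close to the 29.05% quoted above, and the market forecasts imply growth of roughly 20% in each of 2024 and 2025.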

However, as the industry advances, market expectations for drone performance, safety, and range of application scenarios are rising with it. Developing drones that combine high maneuverability, enhanced safety, and seamless human-machine integration has become a key research goal for universities, research institutions, and experts worldwide.

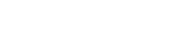

In this context, the application of optical motion capture technology has provided new breakthroughs and research directions for drone development. By enabling precise positioning and tracking, as well as real-time data analysis, this technology offers robust support for drone spatial positioning, obstacle traversal, autonomous navigation, collaborative control, and algorithm validation. Its adoption has significantly improved research efficiency, enhanced drone stability and reliability, and increased the precision and complexity of mission execution.

Applications of Motion Capture Systems in Drones

Spatial Positioning: High-precision infrared cameras track the markers mounted on a drone, and the system resolves the markers' three-dimensional positions in real time, providing data such as 3D spatial position and relative position.

Dynamic Analysis: The motion capture system can parse 6DoF (six degrees of freedom) data in real time, that is, 3D position plus orientation expressed as Euler angles (yaw, pitch, and roll), along with velocity and acceleration. These data provide precise support for drone control research, helping to improve flight performance and stability.
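Velocity and acceleration can be estimated from a stream of captured positions by numerical differentiation. The following is a minimal sketch under stated assumptions, not a description of how any particular motion capture software computes them: the function name `finite_difference`, the fixed sample interval `dt`, and the 100 Hz rate in the usage lines are all hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def finite_difference(samples: List[Vec3], dt: float) -> List[Vec3]:
    """Differentiate evenly spaced 3D samples.

    Uses central differences for interior samples and one-sided
    differences at the two ends of the sequence.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    if dt <= 0:
        raise ValueError("dt must be positive")
    derivative: List[Vec3] = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        span = (hi - lo) * dt
        derivative.append(
            (
                (samples[hi][0] - samples[lo][0]) / span,
                (samples[hi][1] - samples[lo][1]) / span,
                (samples[hi][2] - samples[lo][2]) / span,
            )
        )
    return derivative


# Hypothetical data: a marker accelerating along x at 2 m/s^2, sampled at 100 Hz.
dt = 0.01
positions = [(0.5 * 2.0 * (i * dt) ** 2, 0.0, 0.0) for i in range(7)]
velocities = finite_difference(positions, dt)
accelerations = finite_difference(velocities, dt)
```

Differentiation amplifies measurement noise, so in practice the position stream is usually low-pass filtered before this step, and the end samples (which use one-sided differences) are less accurate than the interior ones.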

Algorithm Validation: High-precision positioning and measurement enable the collection of drone trajectory and attitude data, which are critical for validating algorithms related to autonomous obstacle avoidance, traversal control, formation flying, adaptive control, and landing control.
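One common way to use captured trajectories for validation is to compare them against the reference trajectory the controller was asked to follow. The sketch below computes a root-mean-square tracking error; the function name `tracking_rmse` and the sample coordinates are hypothetical, and it assumes the two trajectories are already time-aligned and expressed in the same coordinate frame.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def tracking_rmse(reference: Sequence[Vec3], measured: Sequence[Vec3]) -> float:
    """Root-mean-square Euclidean distance between paired 3D samples."""
    if not reference or len(reference) != len(measured):
        raise ValueError("trajectories must be non-empty and equal in length")
    squared_error = sum(math.dist(r, m) ** 2 for r, m in zip(reference, measured))
    return math.sqrt(squared_error / len(reference))


# Hypothetical samples in meters: commanded waypoints vs. captured positions.
reference = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (2.0, 0.0, 1.0)]
measured = [(0.0, 0.0, 1.0), (1.0, 0.03, 1.0), (2.0, 0.0, 0.96)]
rmse = tracking_rmse(reference, measured)
print(f"Tracking RMSE: {rmse * 1000:.1f} mm")
```

A single scalar like this makes it straightforward to compare controller variants, provided the capture system's own measurement error is much smaller than the tracking error being studied.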

Additionally, motion capture systems can be applied to heterogeneous collaborative control research involving unmanned aerial vehicles, unmanned ground vehicles, robot dogs, underwater robots, and other platforms, as well as scenarios requiring ground-air or sea-ground-air coordination.

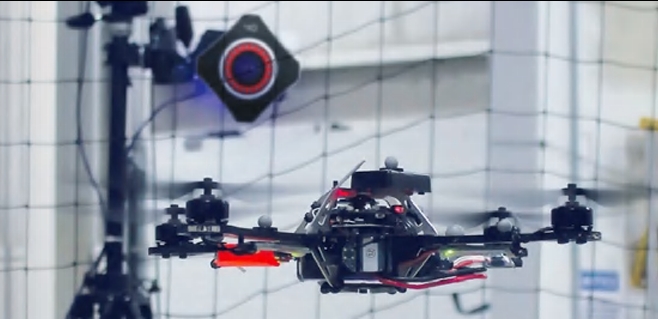

CHINGMU Motion Capture System and Its Applications

The CHINGMU optical motion capture system is characterized by high precision, low latency, wide field of view, and multi-target tracking capabilities. It achieves spatial positioning accuracy of 0.1 mm, angular accuracy of 0.1°, and jitter error of only 0.01 mm. The system can capture rigid bodies, flexible bodies, quadrupeds, and other targets, providing visualized 3D spatial position and 6DoF data. It is suitable for various scenarios, including underwater, indoor, outdoor, and large spaces, and offers customized solutions tailored to specific needs.

In drone research, the CHINGMU motion capture system has gained widespread adoption among universities and research institutions due to its high-precision positioning and stable data output. Institutions such as Fudan University, Shanghai Jiao Tong University, Beijing Institute of Technology, Xidian University, Guangxi University, Shanghai University, and Wenzhou University have utilized this system for drone control research, algorithm validation, and formation flying experiments. By leveraging motion capture technology, these institutions have reduced research costs while ensuring the efficiency and precision of their experiments.

Case Studies

1. Shanghai University, School of Mechatronic Engineering and Automation

To address the challenge of multi-drone collaborative flight, the university conducted research on "Path Planning for Dual-Drone Collaborative Lifting Transport Based on Artificial Potential Field-A* Algorithm". The findings were published in Knowledge-Based Systems. Combining AirSim virtual scenarios with real-world testing, the study simulated and experimentally tested quadcopter collaborative flight control and path planning. To ensure experimental accuracy, the platform was built on the CHINGMU high-precision infrared optical motion capture system.

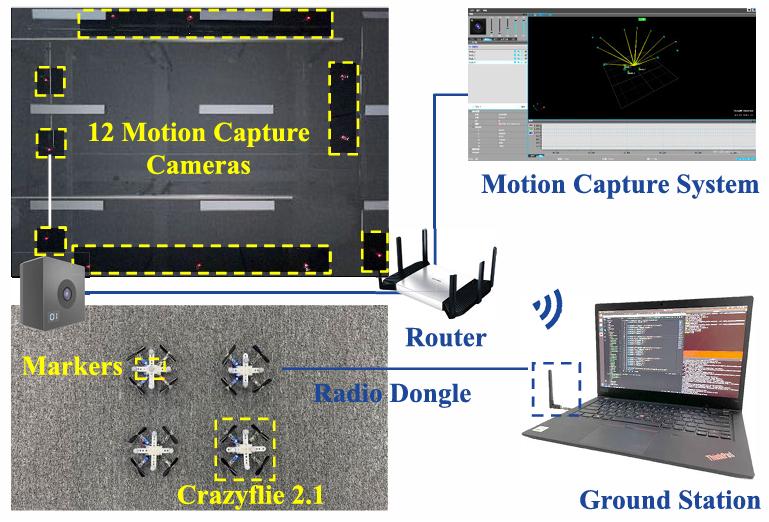

2. Shanghai University of Science and Technology, Machine Intelligence Research Institute

The research team proposed a distributed control method integrating velocity damping and a novel nonlinear saturation function, achieving consensus among multi-agent states without violating input amplitude and rate constraints. The results were published in the control journal IEEE Transactions on Automation Science and Engineering. The experimental setup included the CHINGMU motion capture system, four Crazyflie 2.1 drones, and a Linux-based ground station. Using the system's sub-millimeter positioning accuracy, the team collected real-time drone pose and trajectory data, which served as a precise reference for validating the algorithm against real flight behavior.
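To give a feel for what consensus under bounded inputs means, the sketch below simulates a textbook-style law, not the method from the paper: each agent is a one-dimensional double integrator driven by `tanh`-saturated position differences to its neighbors plus a saturated velocity damping term. The function name, the ring topology, the gains, and the initial positions are all assumptions made for illustration, and the rate constraint addressed in the paper is not modeled here.

```python
import math
from typing import Dict, List, Tuple


def simulate_saturated_consensus(
    positions: List[float],
    neighbors: Dict[int, List[int]],
    damping: float = 1.0,
    dt: float = 0.01,
    steps: int = 5000,
) -> Tuple[List[float], float]:
    """Explicit-Euler simulation of double-integrator agents.

    Control input for agent i:
        u_i = -sum_j tanh(x_i - x_j) - damping * tanh(v_i)
    so |u_i| can never exceed (number of neighbors) + damping.
    Returns the final positions and the largest |u_i| observed.
    """
    x = list(positions)
    v = [0.0] * len(x)
    peak_input = 0.0
    for _ in range(steps):
        u = []
        for i in range(len(x)):
            coupling = sum(math.tanh(x[i] - x[j]) for j in neighbors[i])
            u.append(-coupling - damping * math.tanh(v[i]))
        peak_input = max(peak_input, max(abs(ui) for ui in u))
        for i in range(len(x)):
            x[i] += dt * v[i]  # position update uses the previous velocity
            v[i] += dt * u[i]  # velocity update uses the bounded input
    return x, peak_input


# Four agents on a ring, mirroring the four-drone setup only in agent count.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
final_positions, peak_input = simulate_saturated_consensus([0.0, 1.0, 2.0, 3.0], ring)
spread = max(final_positions) - min(final_positions)
print(f"final spread: {spread:.2e}, peak |u|: {peak_input:.3f}")
```

In a physical experiment, the positions fed into a law like this would come from the motion capture stream rather than from a simulated state, which is why measurement accuracy and latency matter for this kind of validation.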

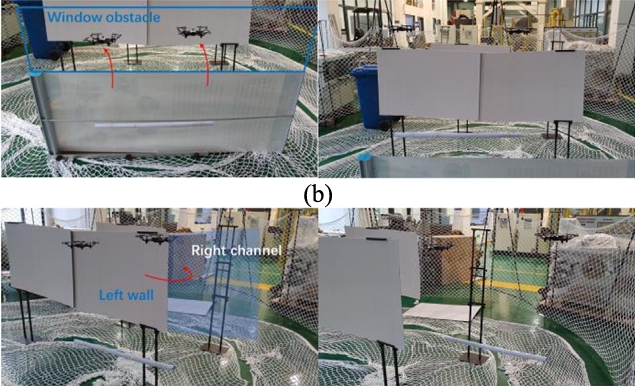

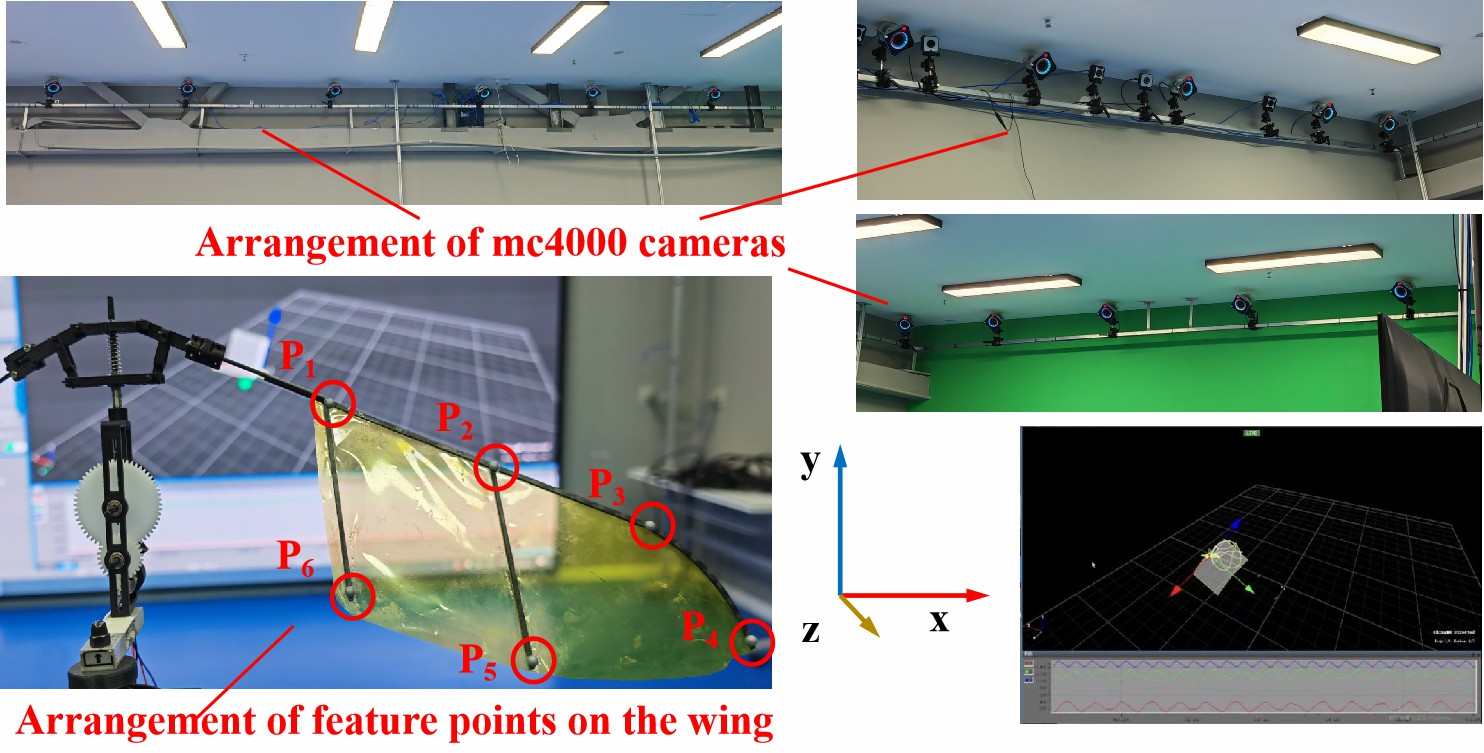

3. Wenzhou University, School of Mechatronic Engineering

To address lift issues in novel flapping wing rotors (FWR), the team conducted research on "Enhancement of Aerodynamic Efficiency in Flapping Wing Rotor Systems via Energy Harvesting Technology". The findings were published in SCIENCE CHINA Technological Sciences. The experiment used the CHINGMU optical motion capture system to precisely capture the physical motion of the FWR, ensuring reliable experimental data. Using MC4000 cameras and CMTracker software, the system recorded time-varying position data for six fluorescent markers on the wing surface; these data were then analyzed to derive the wing's torsion, rotation, and flapping motion curves.

Beyond drone research, the CHINGMU optical motion capture system is also applicable to studies involving quadruped robots, humanoid robots, dexterous hands, and soft robots, providing precise data support for scientific research and embodied intelligence applications.